Hi Eifert (and all),

I've recently been testing the marker detector (which works pretty well for camera placement) to place virtual objects in my Unreal scene (with Broadcast DE).

What I thought would be an easy setup is proving more complicated than I anticipated.

From my understanding, the marker detector gives the relative location/orientation of the marker center from the point of view of the camera looking at it.

So, I thought:

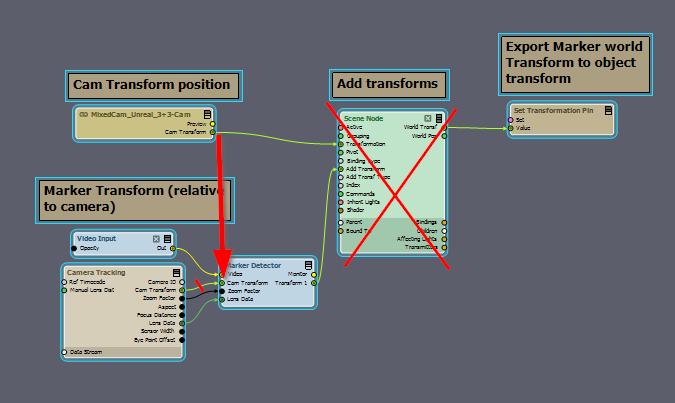

Camera Origin transform + Aruco Marker transform relative to camera = Marker world transform.

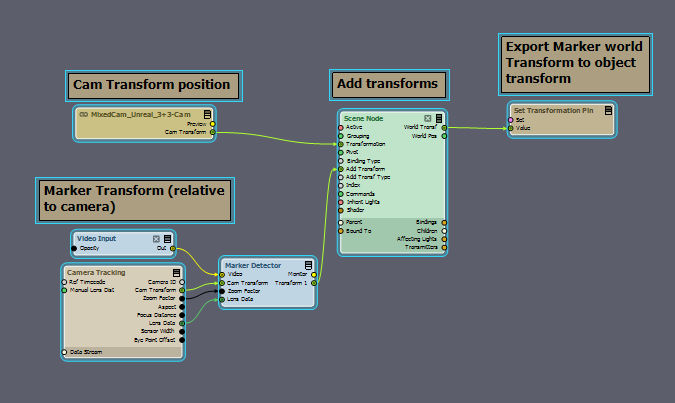
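To make the idea concrete outside the flow editor, here is a minimal sketch in plain Python of what "camera transform + marker transform relative to camera" means if the "+" is read as composing two transforms rather than adding their position and rotation values component by component. Everything here is an assumption for illustration: the helper names are hypothetical, rotation is limited to a single yaw about the Z axis, and the axis convention is not necessarily the one Aximmetry or Unreal uses.

```python
import math

def make_transform(yaw_deg, tx, ty, tz):
    """Build a 4x4 homogeneous transform: yaw about Z (assumed axis) plus translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c,  -s,  0.0, tx],
        [s,   c,  0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def compose(a, b):
    """Matrix product a * b: apply b in the frame described by a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def position(t):
    """Extract the translation part of a 4x4 transform."""
    return (t[0][3], t[1][3], t[2][3])

# Hypothetical values: camera at (2, 0, 1) in the world, rotated 90 degrees.
world_T_cam = make_transform(90.0, 2.0, 0.0, 1.0)
# Marker detected 3 units along the camera's local X axis.
cam_T_marker = make_transform(0.0, 3.0, 0.0, 0.0)

# Composition: marker pose expressed in world space.
world_T_marker = compose(world_T_cam, cam_T_marker)
composed_pos = position(world_T_marker)

# Naive component-wise addition of the two positions, for comparison.
naive_pos = tuple(a + b for a, b in zip(position(world_T_cam),
                                        position(cam_T_marker)))

print("composed:", composed_pos)
print("naive sum:", naive_pos)
```

With a rotated camera the two results differ: the composed position follows the camera's orientation, while the naive sum ignores it. If a module adds values instead of composing transforms, that alone could explain a mismatch.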

In the flow editor, I proceeded as follows (simplified):

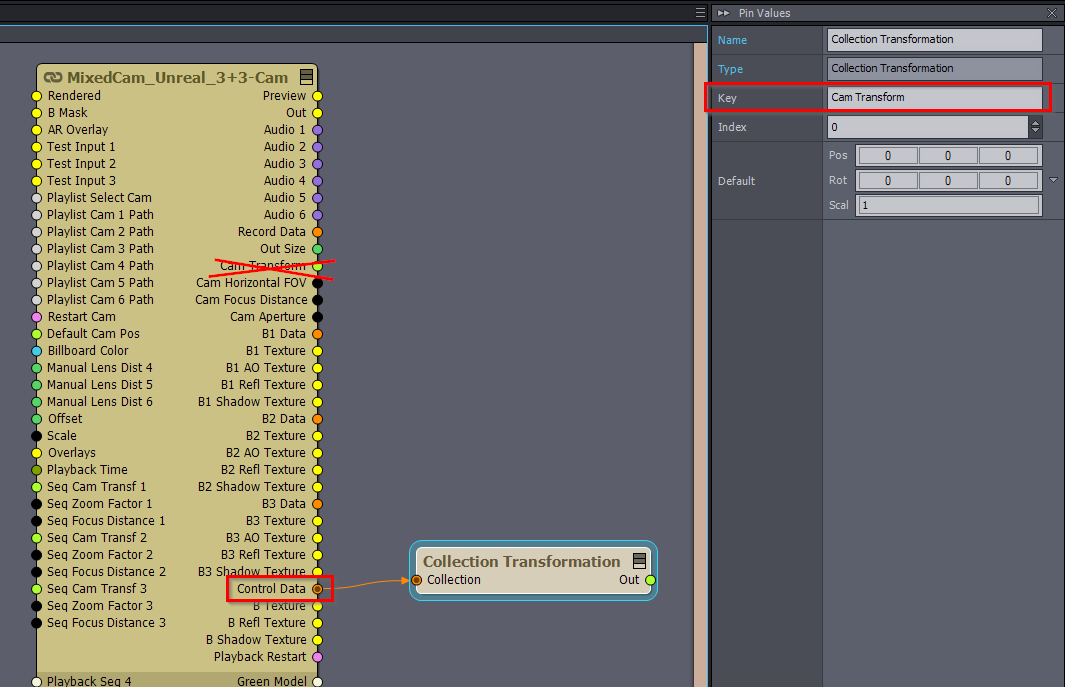

The trouble is that I get inconsistent results across the different "Cam transform values", "origins", "scene node transform binding types", "add Transf Type", etc.

For instance, using the same marker both for the center of the scene (detect origin in TRK inputs) and for the object to place should return zero (or near zero, accounting for rounding errors), which doesn't seem to be the case here.
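The zero check I have in mind can be sketched in plain Python as follows. It assumes (and this is only my assumption, not something I know about the TRK inputs) that detecting the origin from a marker amounts to setting the camera's world transform to the inverse of the marker's camera-relative transform. The helper names are hypothetical and rotation is again limited to a yaw about Z.

```python
import math

def make_transform(yaw_deg, tx, ty, tz):
    """Build a 4x4 homogeneous transform: yaw about Z (assumed axis) plus translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c,  -s,  0.0, tx],
        [s,   c,  0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def compose(a, b):
    """Matrix product a * b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def invert_rigid(t):
    """Invert a rigid transform: rotation becomes R^T, translation becomes -R^T p."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[0] + [new_p[0]],
            r_t[1] + [new_p[1]],
            r_t[2] + [new_p[2]],
            [0.0, 0.0, 0.0, 1.0]]

def max_deviation_from_identity(t):
    """Largest absolute difference between t and the 4x4 identity matrix."""
    return max(abs(t[i][j] - (1.0 if i == j else 0.0))
               for i in range(4) for j in range(4))

# Hypothetical detector output: marker pose as seen from the camera.
cam_T_marker = make_transform(35.0, 0.4, -1.2, 2.5)

# Assumption: the same marker defines the scene origin, so the camera's
# world transform is the inverse of the marker's camera-relative transform.
world_T_cam = invert_rigid(cam_T_marker)

# Placing an object on that same marker should land on the origin.
world_T_marker = compose(world_T_cam, cam_T_marker)
deviation = max_deviation_from_identity(world_T_marker)

print("max deviation from identity:", deviation)
```

Under these assumptions the result is the identity transform up to floating-point error, which is the "zero or near zero" I expected to see in the flow graph.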

So, my two questions:

- Is there already a working compound that does exactly this?

- If not, what am I doing wrong?

Thanks for your help.

Has no one else tried using the markers to place objects in the scene?