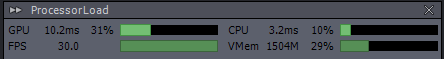

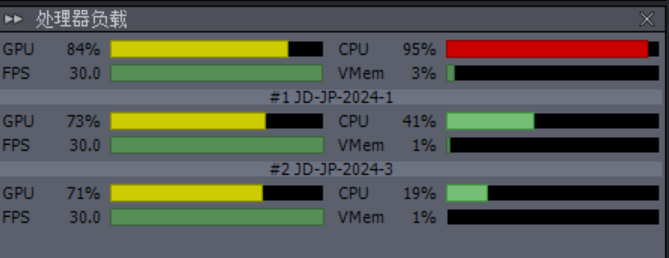

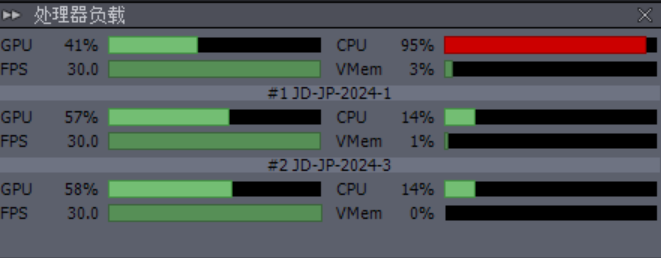

We used one server as the main compositing server and another for rendering LED wall tests. We tested the Nvidia RTX 5000 Ada card (with Nvidia Quadro Sync) and the RTX 4090 card for rendering the LED wall. Our main server has an Nvidia RTX 5000 Ada card with Nvidia Quadro Sync and a DeckLink 8K Pro, which we connected to external Genlock, the tracking system, and the cameras. Even though both the rendering server and the compositing server have 5000 Ada cards and are connected to Genlock signals, we found that the animations on the LED wall and the animations in the Digital Extension could not be seamlessly matched. The internal and external animations do not appear at the same time. This is consistent with the results we obtained by rendering with a 4090 card without frame synchronization.

Therefore, we suspect that frame synchronization is not working with UE5 or Aximmetry. However, if we unplug the frame synchronization, Aximmetry also displays an error.

So I would like to ask: what additional settings are needed to synchronize animations between different machines in UE5? Is it impossible for animations to be synchronized between different machines? If it is possible, what is the difference between using consumer-grade graphics cards and professional graphics cards? Or did we miss something about Genlock in Aximmetry?

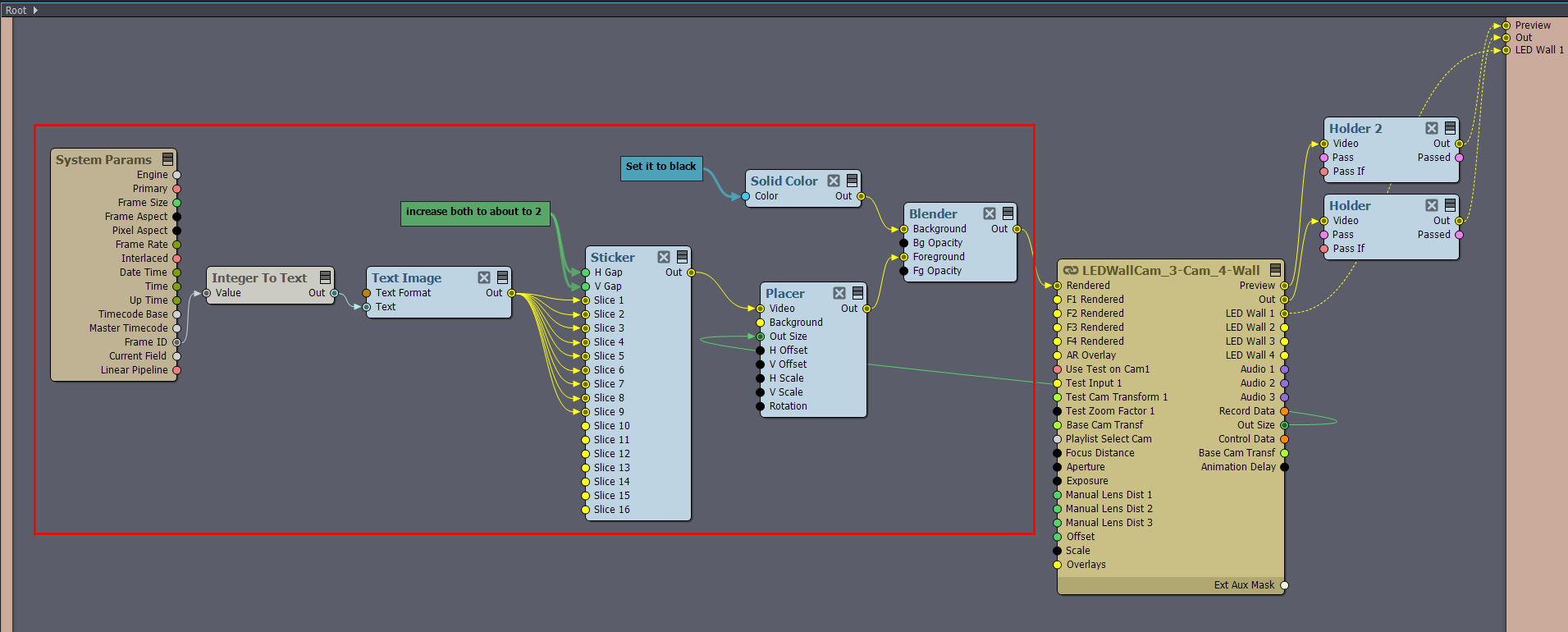

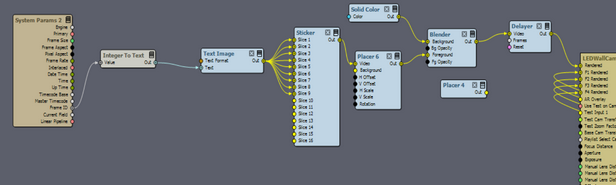

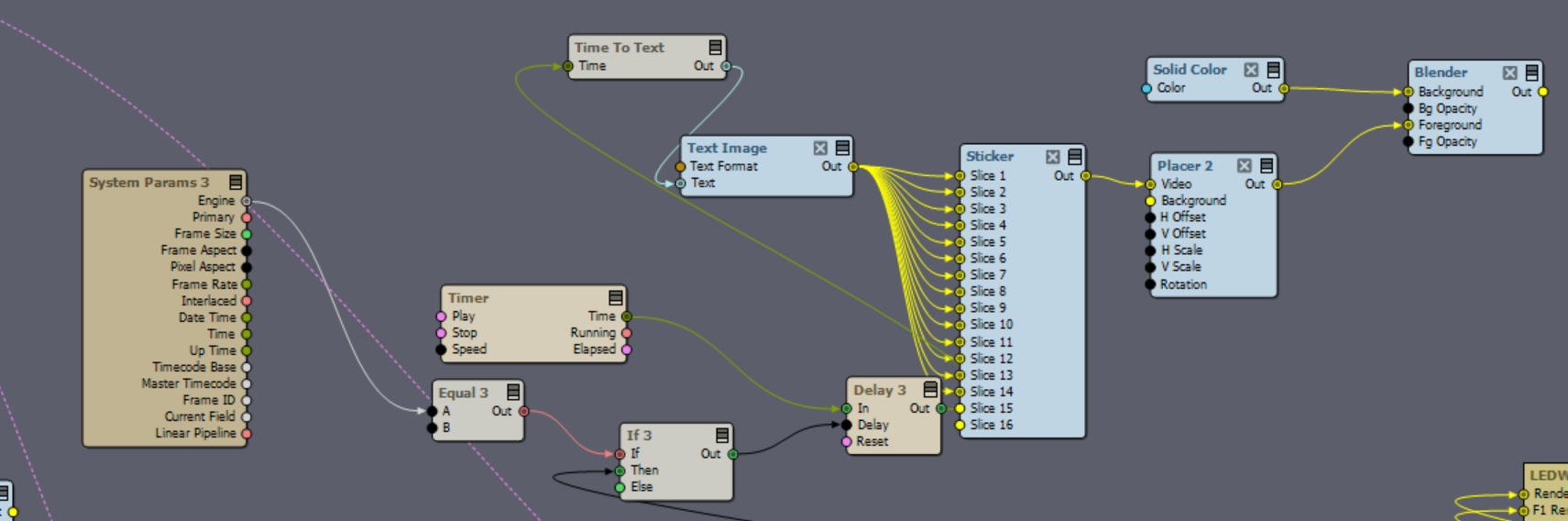

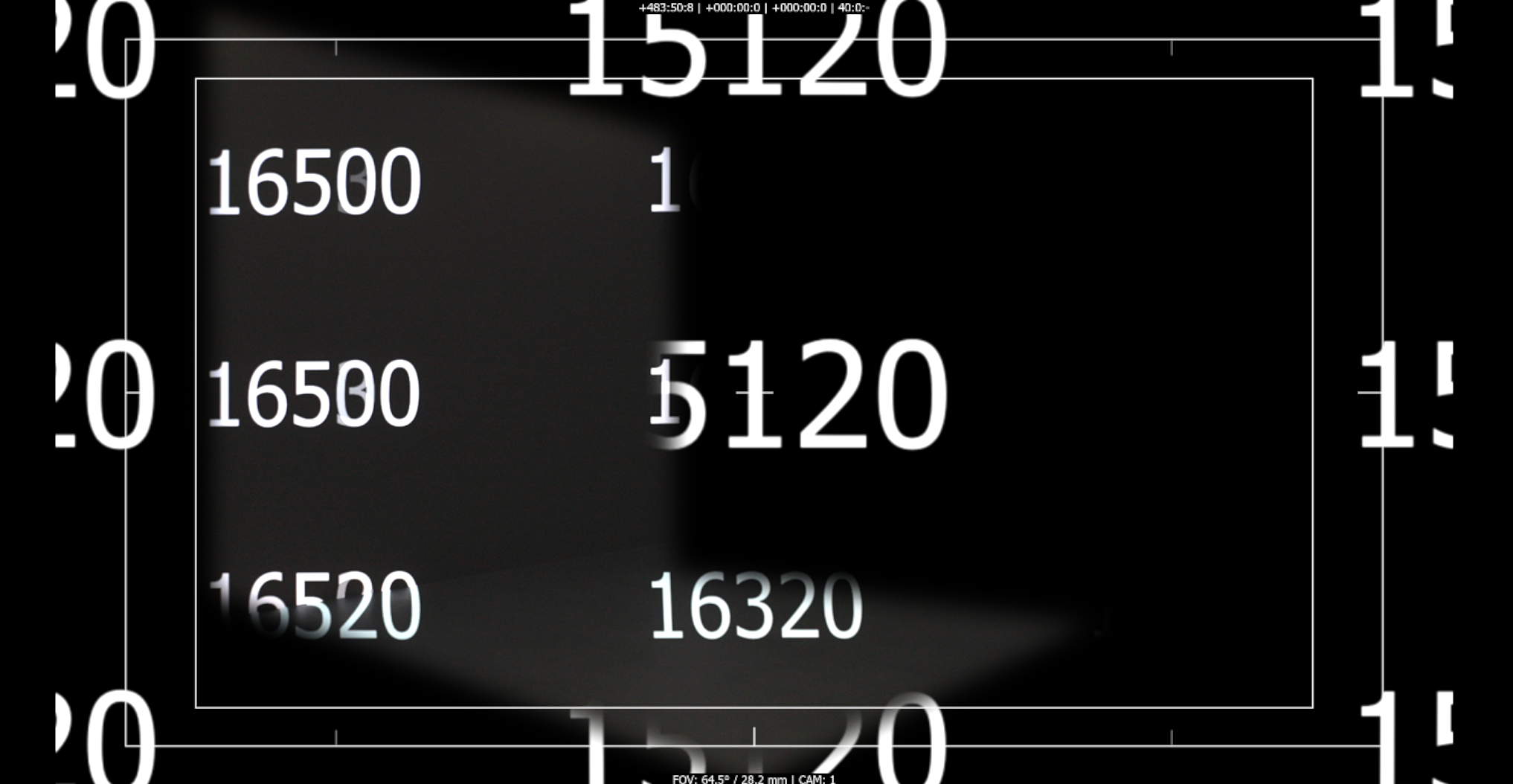

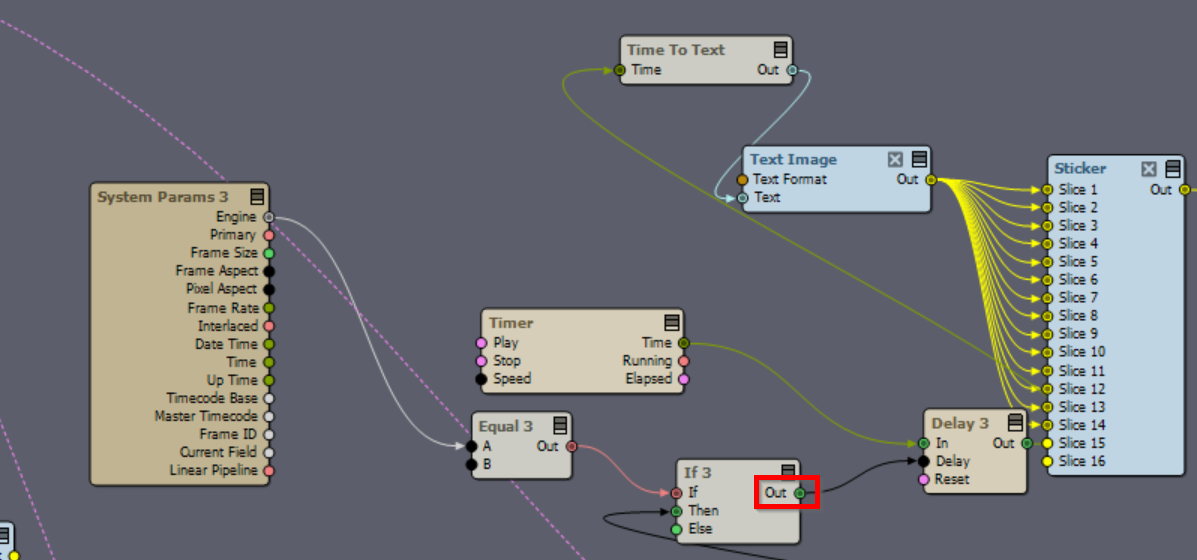

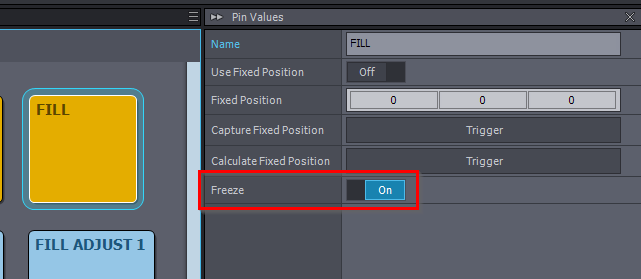

In the above image's red rectangle, a video image is rendered with the frame index number displayed multiple times. This image is then connected to the LED Wall compound. In your studio, position the camera so it simultaneously captures (frustum) both the LED Wall and the Digital Extension.
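As a rough illustration of how such a frame-counter test could be read, the sketch below converts the two frame indices visible in a single captured camera frame (one inside the Digital Extension, one on the LED wall) into an offset in frames and milliseconds. The function name, the sample indices, and the 50 fps rate are assumptions for illustration only; none of this is part of Aximmetry.

```python
def frame_offset(extension_index: int, wall_index: int, fps: float) -> tuple[int, float]:
    """Return how far the LED wall image lags behind the Digital Extension.

    extension_index: frame index read from the Digital Extension part of the shot
    wall_index:      frame index read from the LED wall part of the same shot
    fps:             frame rate of the system (hypothetical value, set to your own)
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    frames = extension_index - wall_index   # positive: the wall shows older frames
    millis = frames * 1000.0 / fps          # same offset expressed in milliseconds
    return frames, millis


# Example with made-up readings: the extension shows 1204 while the wall shows 1200.
frames, millis = frame_offset(1204, 1200, fps=50.0)
print(f"LED wall lags by {frames} frames ({millis:.0f} ms)")
```

An offset read this way is only a starting point for the delay settings; the value still has to be verified visually in the studio.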


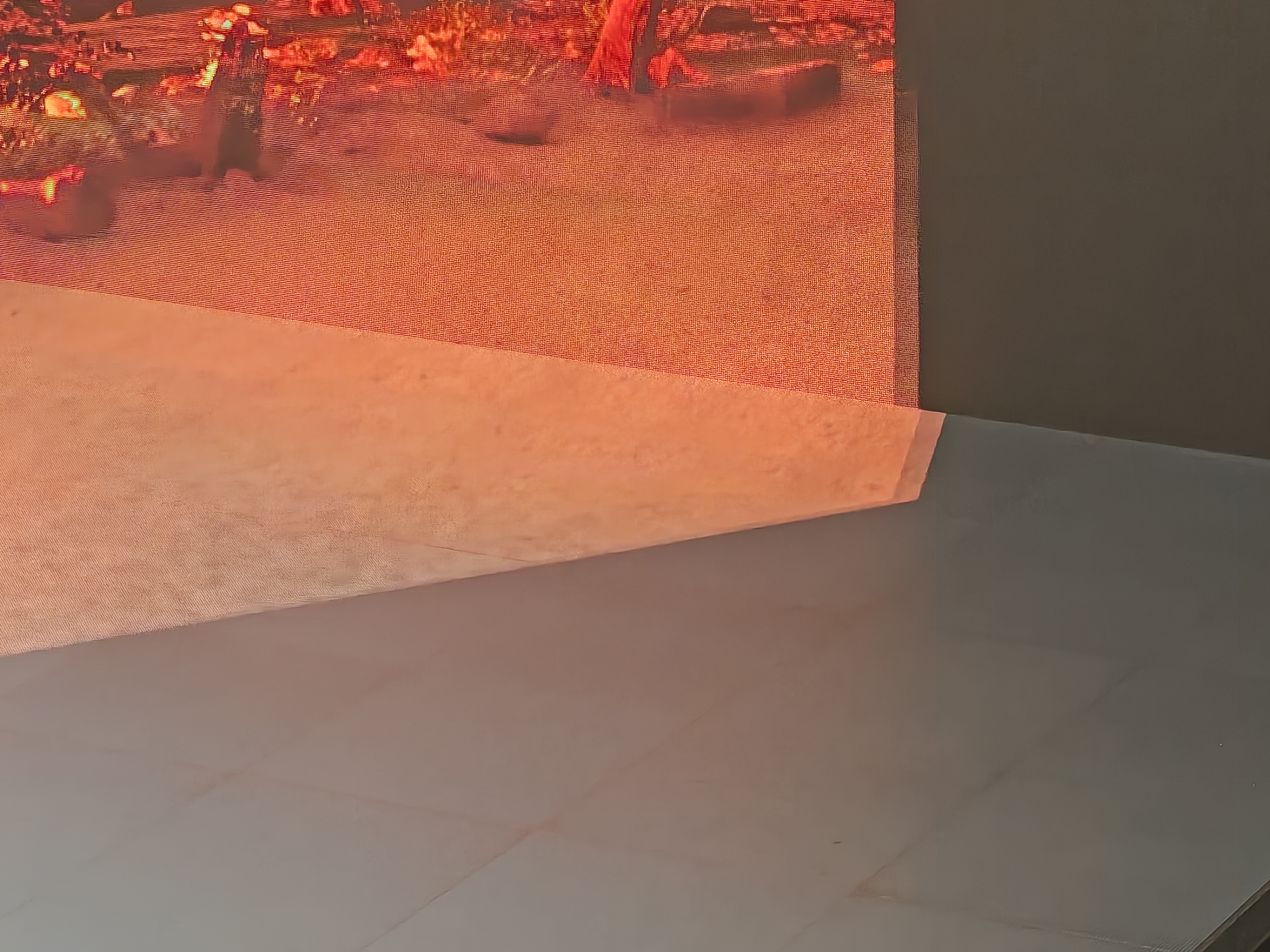

Also, when we use Redspy to pan the camera, we're seeing this slight delay at the edges.


Hi,

You can synchronize animation between different machines and also between the Digital Extension and the LED walls. However, the latter won't happen automatically.

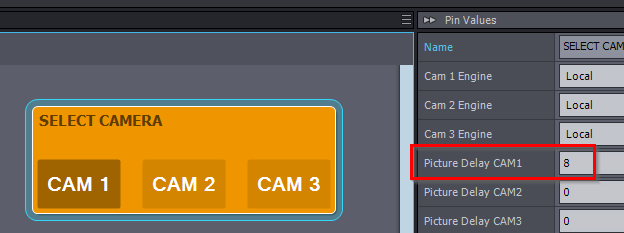

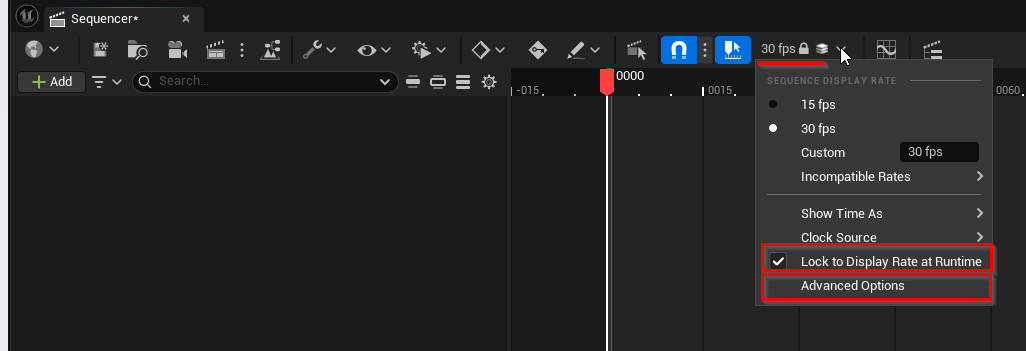

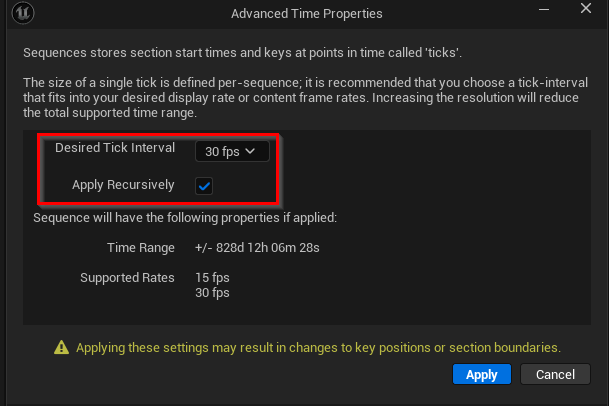

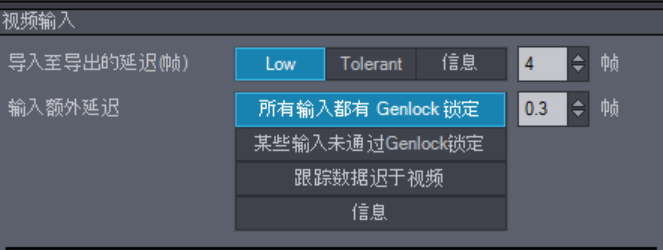

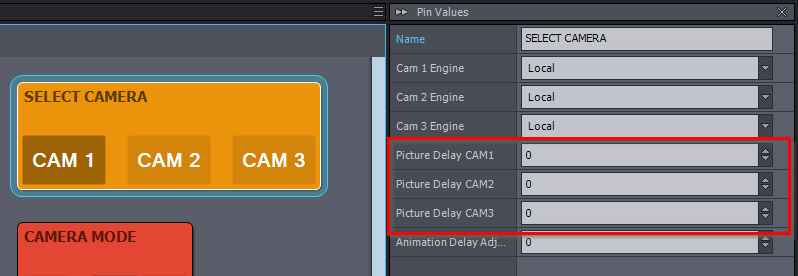

You are likely experiencing the animation being out of sync with the Digital Extension because you don't have a Picture Delay(s) set:

If you don't set a Picture Delay and leave it at 0, the Digital Extension will fit nicely on your LED wall's image. However, the animation will differ between the LED wall's image and the Digital Extension's image (meaning the animations/movements will occur sooner in the Digital Extension). Additionally, switching between cameras will be out of sync.

If you set the correct Picture Delay, then animation and switching between cameras will be in sync. However, the Digital Extension fitting around the LED Wall will be less accurate.

If you render the Digital Extension separately, then the animation and switching between cameras will be in sync, and the Digital Extension fitting will be accurate. However, you will need at least two machines and must set the delay between animations or events in the Flow Editor.

You can read how to use two machines like that in the Switching Between Cameras paragraph in the documentation: https://aximmetry.com/learn/virtual-production-workflow/preparation-of-the-production-environment-phase-i/led-wall-production/using-led-walls-for-virtual-production/#switching-between-cameras

Note that Nvidia Quadro Sync is not yet fully supported. Your ADA cards will still be genlocked, but each machine might have a slightly different delay. This can become a visible issue when using more than one LED wall. However, in the Digital Extension, you probably won't be able to notice a one-frame delay.

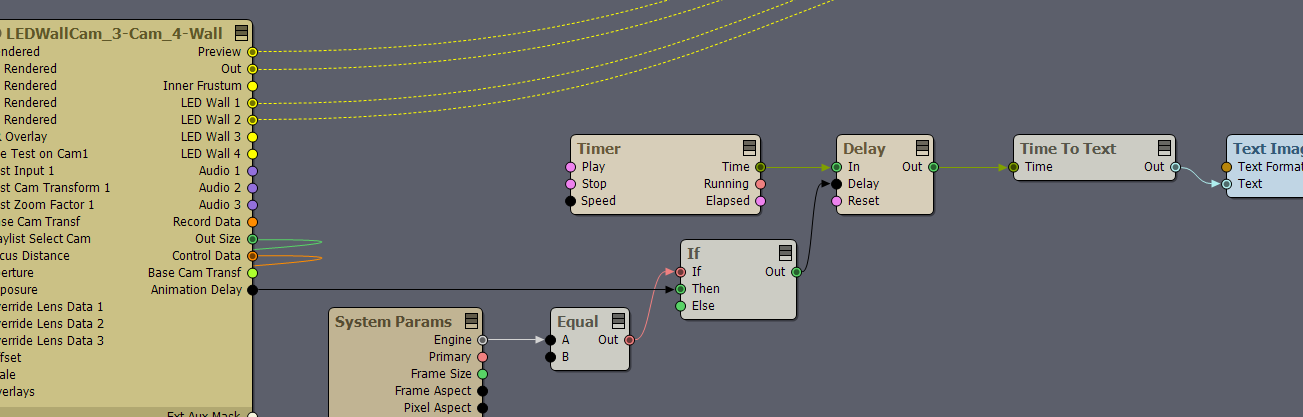

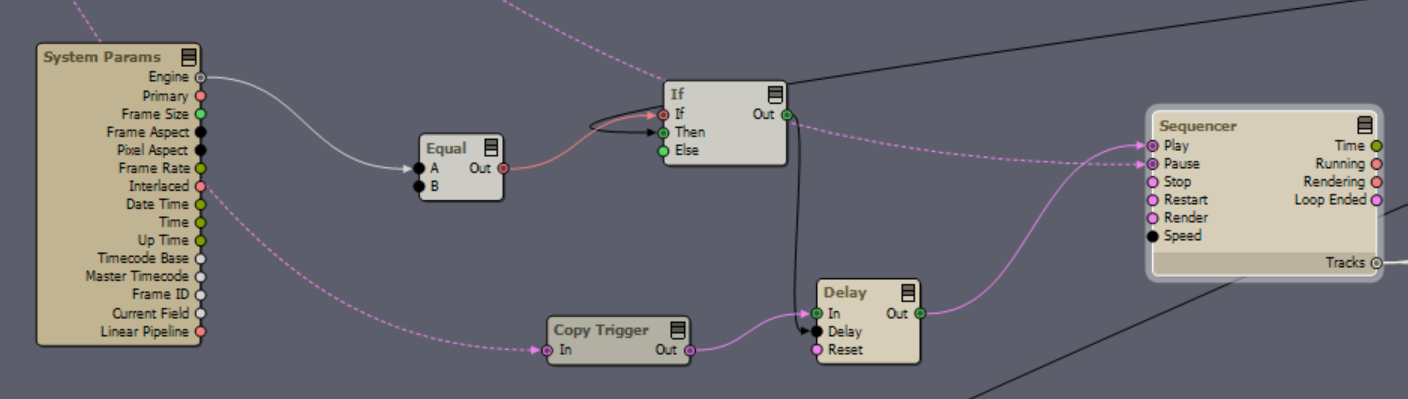

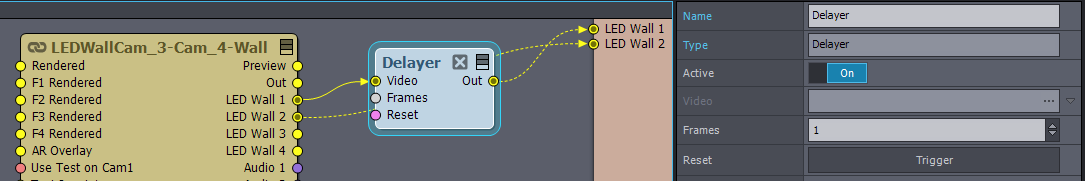

You can fix such delays by adding a one-frame delay using a Delayer module to the LED wall output that is not lagging behind:
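To make the arithmetic behind that concrete, here is a minimal sketch that works out how many extra frames each output would need so that all of them match the most delayed one. The output names and measured delays are hypothetical, and the function is not an Aximmetry API; the resulting numbers would be entered by hand in the corresponding Delayer modules.

```python
def compensating_delays(measured: dict[str, int]) -> dict[str, int]:
    """Return the extra frame delay each output needs to match the slowest one.

    measured maps an output name to its observed delay in frames
    (hypothetical values, e.g. taken from a frame-counter test).
    """
    if not measured:
        return {}
    slowest = max(measured.values())  # the output that lags the most
    return {name: slowest - delay for name, delay in measured.items()}


# Example with made-up measurements: wall_b lags one frame behind wall_a,
# so wall_a (the output that is not lagging) gets the one-frame delay.
print(compensating_delays({"wall_a": 3, "wall_b": 4}))
```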

Note that in a future release of Aximmetry, Nvidia Quadro Sync will be fully supported. Additionally, there will likely be an automatic Picture Delay calculation option, and the Animation Delay process will be simplified.

Warmest regards,