Multi-Machine Camera Tracking:

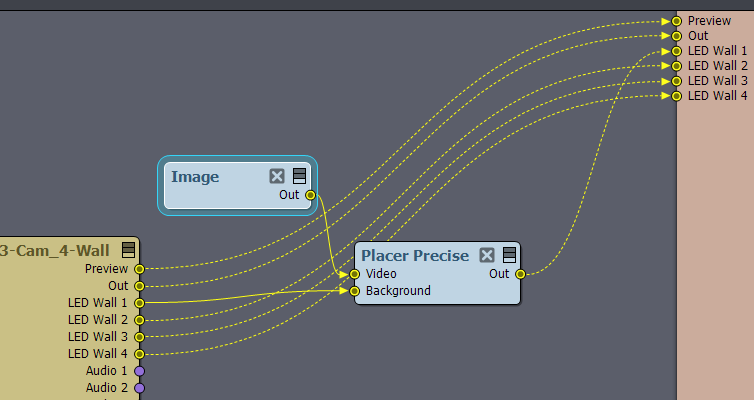

We have successfully set up a multi-machine system but are encountering limitations in tracking two different cameras on two different computers simultaneously. The camera tab in the LEDWall tool currently only allows for switching between cameras, not rendering two different ones concurrently. Despite this, we can output two distinct tracked Unreal feeds from the two computers.

Our goal is to have one computer render from one camera and the other from a different camera. We tested this by running the Composer app on both computers and feeding one computer's output to the other; it works, but it is not a streamlined solution. The tracking information is available to both machines, but we need a way to specify that one machine tracks one camera while the other tracks a different camera. Is there a way to achieve this? Could a composition or script be created to enable this functionality?

Outer Frustum Control (Fill Control):

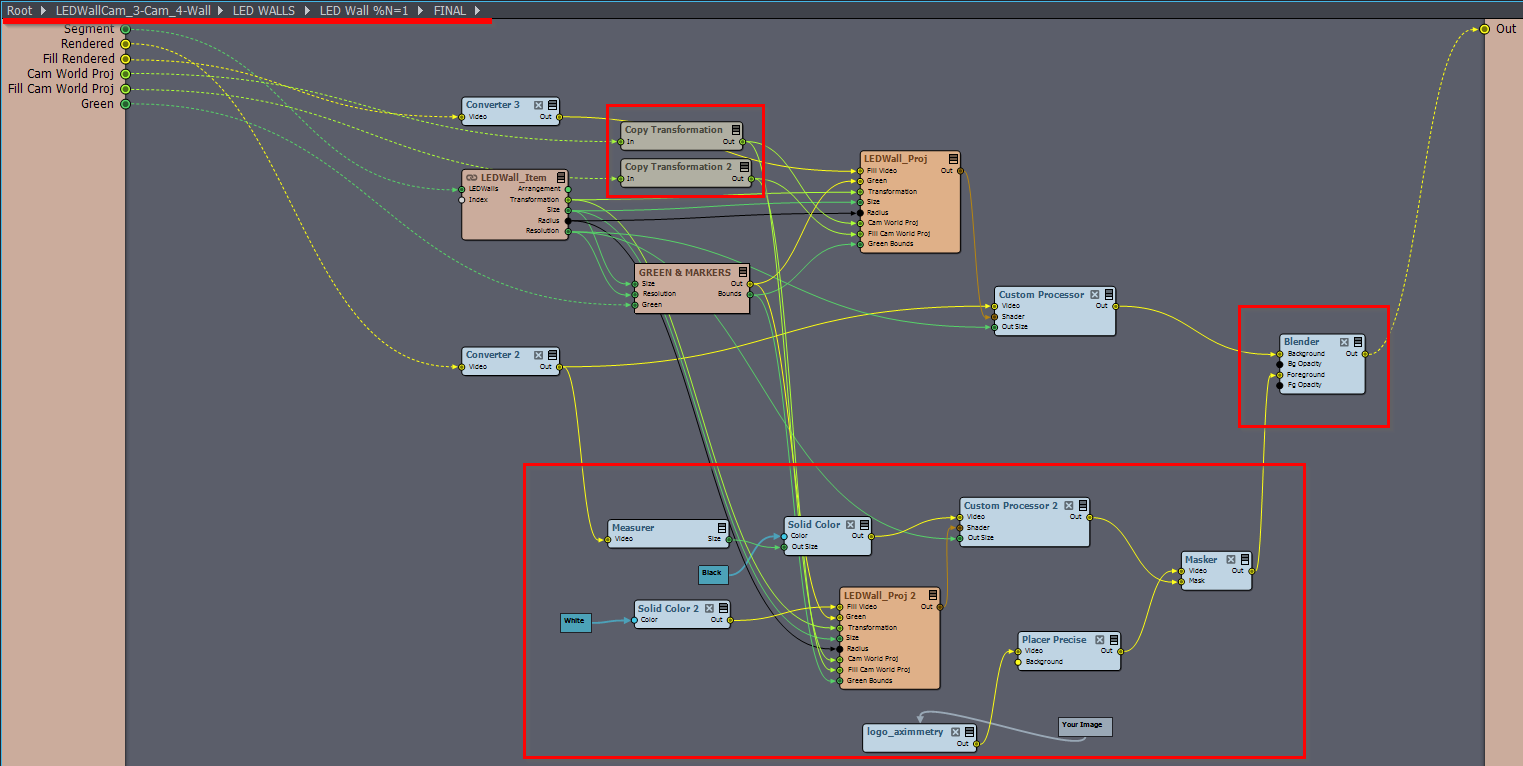

We need to control the outer frustum (Fill) with a custom image or video to minimize flickering, because using different outer frustums increases flickering for the talent. While we can feed the Fill with an image or video, it gets distorted by the wall setup. Our goal is to feed the entire canvas with a video source without any wrapping or distortion. Currently, feeding the Fill with an image or video results in an offset display, likely due to how the software handles the input. How can we make the Fill display content accurately, without the software assuming it needs to wrap or adjust the feed?

Displaying 360 Content on a 180-Degree Wall:

We are exploring the best way to display 360 content on a 180-degree circular wall. This is important for some of our creative shoots. What is the recommended approach for achieving a seamless display of 360 content on a 180-degree setup? Any guidance or solutions you can offer would be highly valuable.

Note that this will not change what you see in the STUDIO preview; it will only affect the image that goes out to the LED Wall.

Hi,

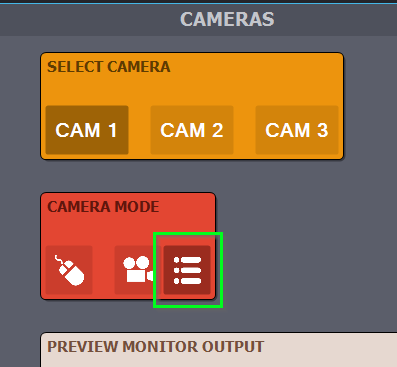

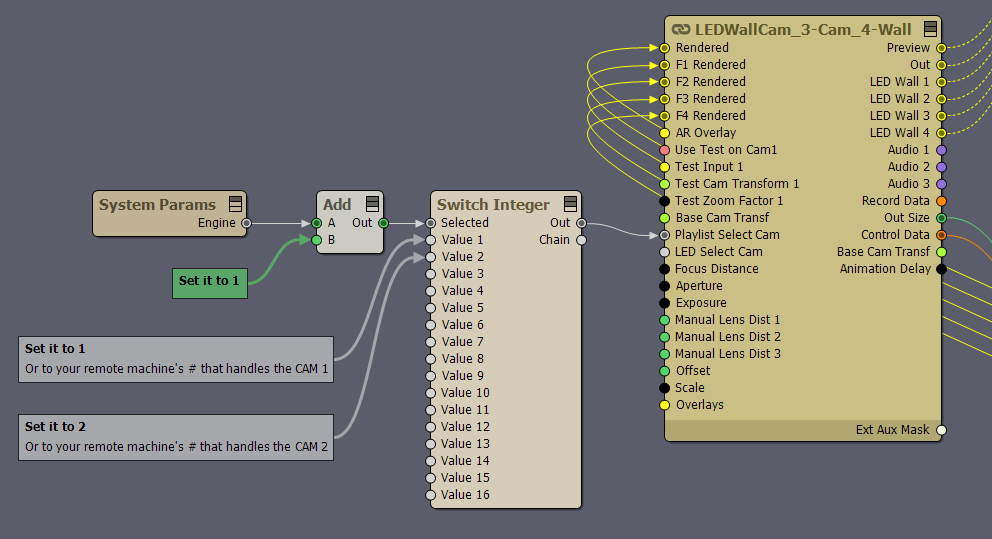

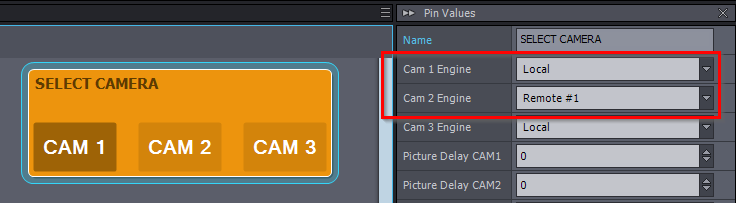

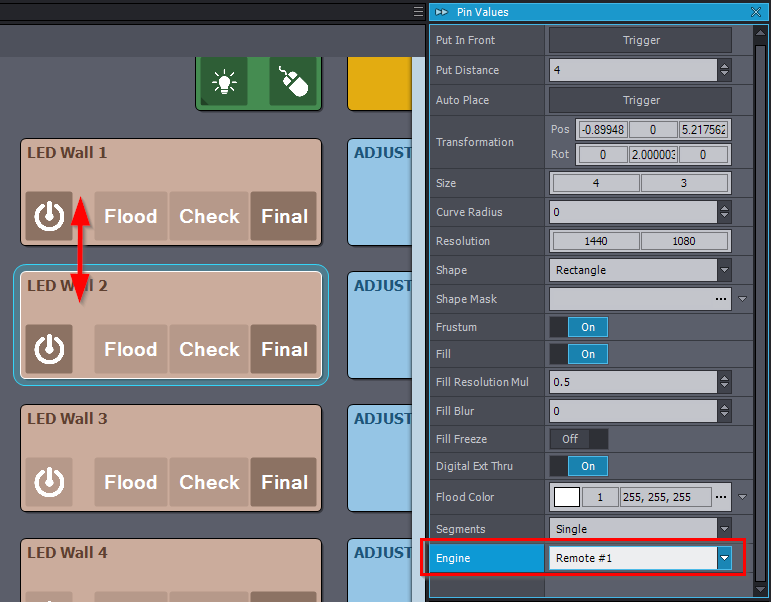

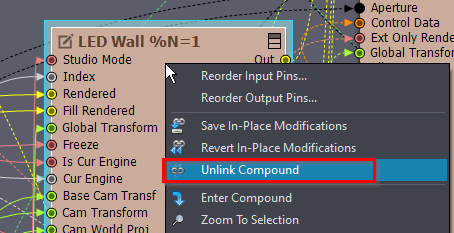

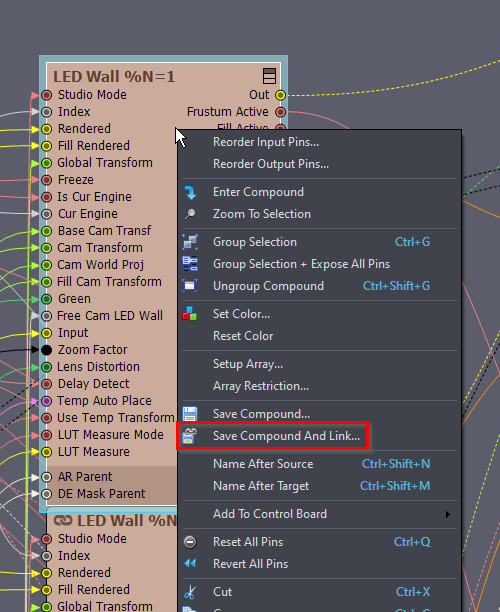

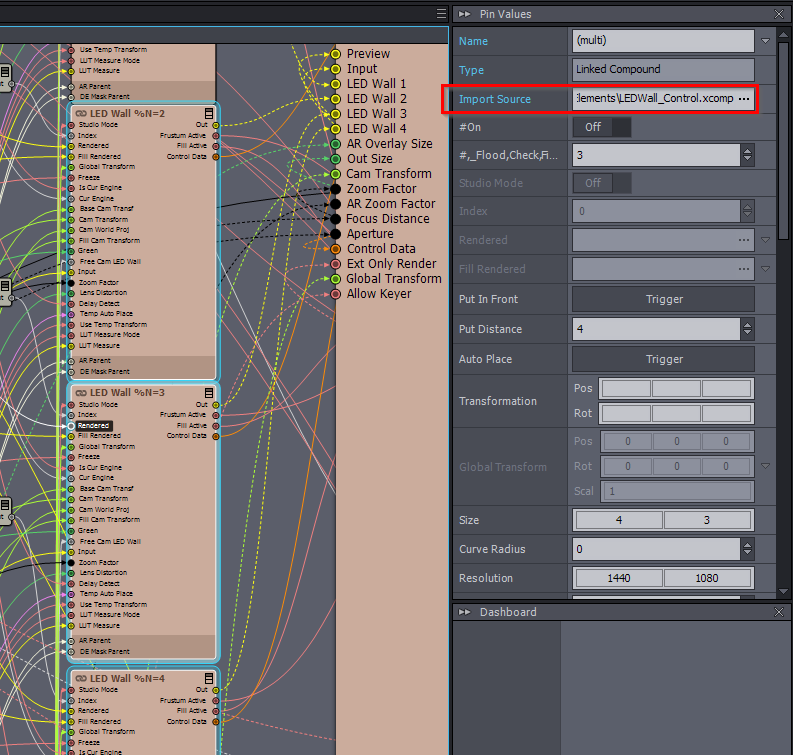

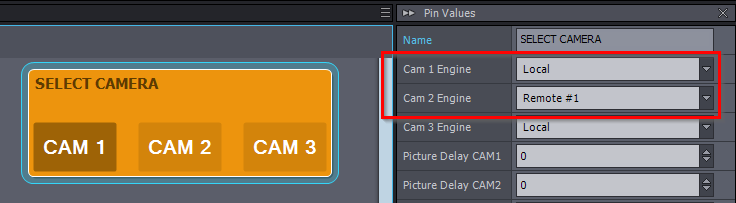

The biggest problem with rendering two different camera perspectives concurrently on the LED Wall is that an LED Wall can display only one image at a time. There are some ways to partially mitigate this fundamental limitation of LED Wall productions; for example, having very careful camera operators who ensure that their cameras' perspectives never cross. We might be able to provide a solution, depending on how you want to overcome this limitation. Also, note that the latest version of Aximmetry (2024.2.0) supports camera inputs running on different remote computers in LED Walls:

This allows switching between them, but not rendering them concurrently.
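To make "perspectives never cross" a bit more concrete, here is a minimal sketch, assuming a circular wall of radius `radius` centered at the origin, cameras standing inside that circle, and only the horizontal field of view being considered. It projects each camera's horizontal FOV onto the wall as an arc and checks whether two arcs overlap. The function names are hypothetical and are not part of Aximmetry; if your setup renders the inner frustum larger than the lens FOV, add that margin to `hfov_deg`.

```python
import math


def hit_azimuth(cam_x, cam_y, ray_deg, radius):
    """Azimuth (degrees, 0-360) where a ray from a camera inside a
    circular wall of the given radius hits the wall."""
    dx, dy = math.cos(math.radians(ray_deg)), math.sin(math.radians(ray_deg))
    # Solve |p + t*d|^2 = R^2 for the positive t (d is a unit vector).
    b = cam_x * dx + cam_y * dy
    c = cam_x ** 2 + cam_y ** 2 - radius ** 2  # negative: camera is inside
    t = -b + math.sqrt(b * b - c)
    hx, hy = cam_x + t * dx, cam_y + t * dy
    return math.degrees(math.atan2(hy, hx)) % 360.0


def footprint(cam_x, cam_y, yaw_deg, hfov_deg, radius):
    """Counter-clockwise wall arc (start, length) in degrees covered by
    the camera's horizontal field of view (assumes hfov_deg < 180)."""
    start = hit_azimuth(cam_x, cam_y, yaw_deg - hfov_deg / 2, radius)
    end = hit_azimuth(cam_x, cam_y, yaw_deg + hfov_deg / 2, radius)
    return start, (end - start) % 360.0


def arcs_overlap(a, b):
    """True if two (start, length) arcs on the circle share any azimuth."""
    (a0, la), (b0, lb) = a, b
    return (b0 - a0) % 360.0 < la or (a0 - b0) % 360.0 < lb
```

For example, two cameras at the center of a 5 m wall, both with a 60° horizontal FOV, one facing 0° and the other 90°, cover the arcs 330°-30° and 60°-120°, so `arcs_overlap` returns `False`; turning the second camera to 45° makes the arcs overlap. Parts of an arc may fall outside a 180-degree wall, which this sketch does not check.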

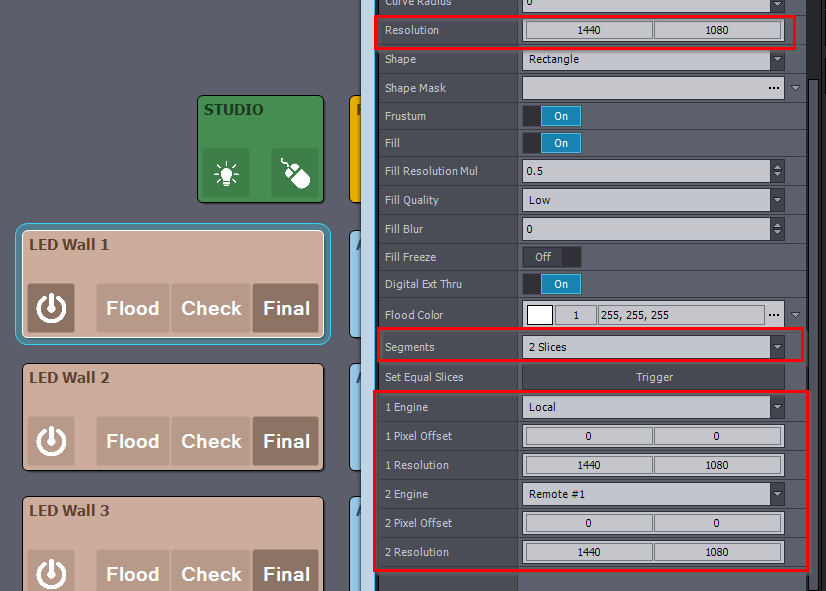

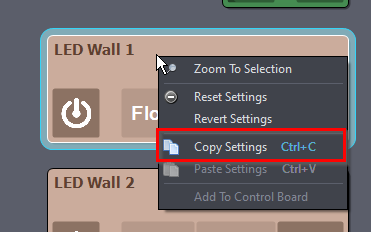

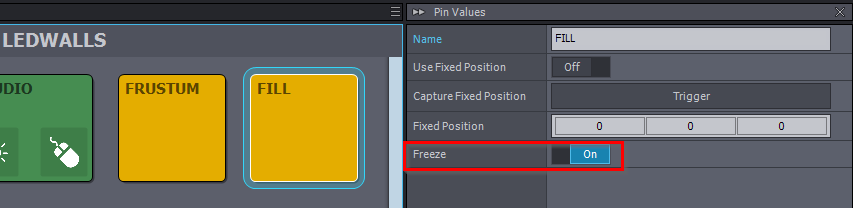

Regarding the Fill control, there is an option to Freeze the Fill:

This should stop the flickering, but it will also stop the Fill from updating.

You can also freeze the Fill per LED Wall in the LED Wall panels, instead of applying it to all LED Walls as in the image above.

If you experience flickering when getting too close to the LED Wall or when being enclosed by it, you can fix it by using multiple LED Wall panels and then stitching them together with a stitcher module before outputting them. If you can describe the kind of flickering you experience in more detail, or share a video of it, I can better determine whether this solution is appropriate. If it is something else, I am still confident that we can come up with a solution that won't require you to change the entire Fill.

Regarding the 360 content, I am assuming it is a video or video stream. You can make a skybox from it, as discussed here: https://my.aximmetry.com/post/1951-is-it-possible-to-use-a-360-degree-image

There is even more information about using 360 content for LED Wall production here: https://my.aximmetry.com/post/2527-360-degree-video-on-led-wall
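For the horizontal part of the 360-to-180 mapping, here is a minimal sketch, assuming the 360 content is an equirectangular frame whose column 0 corresponds to yaw 0° with yaw increasing to the right, and that the viewer stands at the center of the circular wall. Under those assumptions each degree of wall corresponds to `frame_width / 360` source columns, so a 180-degree wall shows exactly half of the panorama. `wall_columns` is a hypothetical helper, not an Aximmetry function; it only picks which part of the panorama faces the wall and does not do vertical remapping or the perspective-correct rendering that a skybox in the 3D scene gives you.

```python
def wall_columns(frame_width, wall_span_deg, center_yaw_deg):
    """Half-open (start, end) column ranges of an equirectangular frame
    that cover a curved wall of wall_span_deg centered on center_yaw_deg.

    Assumes column 0 is yaw 0 deg and yaw grows left to right. Returns two
    ranges when the crop crosses the panorama's left/right seam."""
    if not 0 < wall_span_deg <= 360:
        raise ValueError("wall_span_deg must be in (0, 360]")
    left_yaw = (center_yaw_deg - wall_span_deg / 2) % 360.0
    start = round(left_yaw * frame_width / 360.0) % frame_width
    width = round(wall_span_deg * frame_width / 360.0)
    end = start + width
    if end <= frame_width:
        return [(start, end)]
    # The crop wraps past the right edge: take the tail, then the head.
    return [(start, frame_width), (0, end - frame_width)]
```

For a 3840-pixel-wide frame, `wall_columns(3840, 180, 180)` gives `[(960, 2880)]`, while centering the wall on yaw 0 gives `[(2880, 3840), (0, 960)]` because the crop crosses the seam. If the frame is a NumPy array, the wall image could be assembled with `np.concatenate([frame[:, s:e] for s, e in ranges], axis=1)`.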

Warmest regards,