Hello, how does Aximmetry determine the bit depth in a workflow from input to output? For example, if I set the output on an SDI port to 10-bit, but the live camera capture input is set to Auto (which defaults to 8-bit), what is the net result of this configuration? Additionally, what happens if I set the input/capture to 10-bit, but the render output to 8-bit?

I know that ideally these should be the same, but I found that if I set all ports to 8-bit, I don't have a problem with GPU and CPU overloading and my frame rate stays steady at 24 fps. If I set either of these (or both) to 10-bit, my GPU and CPU get overloaded. From my reading and research, I would want 10-bit for keying to get the best possible key. I'm using SDI ports for input and output.

In the end I probably need a new GPU but want to know the internal specifics so that I can understand and troubleshoot with some confidence, and justify the expenditure of a new GPU and maybe a new CPU/computer as well.

thanks

Hi,

The increased GPU cost is likely due to GPU bandwidth overload. This can occur when converting the SDI signal into a format suitable for video processing, and then converting it back into an SDI video signal. During these conversions, the higher bit depth format plays a role in increasing GPU load. Once the video is inside Aximmetry, processing in 8-bit or 10-bit does not significantly affect performance.
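To give a rough sense of scale for the extra data involved, here is a minimal sketch that compares the raw, uncompressed payload of an 8-bit and a 10-bit signal. The 1920x1080 resolution and YCbCr 4:2:2 sampling are assumptions for illustration (the thread only states 24 fps), and the helper name is hypothetical. It does not model Aximmetry's internal conversions or the actual packed formats used by the capture card, so treat the numbers as a lower bound on the difference, not a measurement.

```python
def video_data_rate_mb_s(width, height, fps, bits_per_pixel):
    """Raw uncompressed data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return width * height * fps * bits_per_pixel / 8 / 1e6


# Assumed example values: only the 24 fps figure comes from the thread.
WIDTH, HEIGHT, FPS = 1920, 1080, 24

# YCbCr 4:2:2 carries two samples per pixel on average
# (one luma sample plus alternating Cb/Cr chroma samples).
SAMPLES_PER_PIXEL = 2

rate_8bit = video_data_rate_mb_s(WIDTH, HEIGHT, FPS, SAMPLES_PER_PIXEL * 8)
rate_10bit = video_data_rate_mb_s(WIDTH, HEIGHT, FPS, SAMPLES_PER_PIXEL * 10)

print(f"8-bit 4:2:2:  {rate_8bit:.1f} MB/s")
print(f"10-bit 4:2:2: {rate_10bit:.1f} MB/s")
print(f"10-bit / 8-bit ratio: {rate_10bit / rate_8bit:.2f}")
```

The raw payload grows by a quarter per stream. 10-bit samples also do not align to byte boundaries, so they typically need extra unpacking and packing work during conversion, which is consistent with the conversion step being where the cost shows up.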

Setting the output to 10-bit only changes the final SDI signal's bit depth; it does not impact the bit depth that Aximmetry or the Flow Editor operates in. However, you do not need to worry about anything being rendered at a lower than 10-bit depth, as by default, the Aximmetry camera compounds render scenes at a minimum of 10-bit depth.

Ideally, you should choose the input and output bit depths separately, based on what your workflow needs, rather than simply setting both to the same value.

For input, use 10-bit if your camera supports it, as this generally provides better image quality. The higher bit depth may benefit not only keying but also other aspects of your production.

For output, set it to 10-bit only if you need it, for example if you plan to do post-production color grading that would benefit from 10-bit, or if your content will be broadcast in HDR. Note that HDR involves more than just bit depth. For more details on HDR and setting it up in Aximmetry, refer to this guide: https://aximmetry.com/learn/virtual-production-workflow/setting-up-inputs-outputs-for-virtual-production/video/hdr-input-and-output/#unreal-engine-setup-for-ar-production

If you "set the SDI output to 10-bit, but the input live camera capture is set to Auto (which defaults to 8-bit)", the captured video brought into your virtual scene will be limited to 8-bit quality. However, the rendered virtual environment around it will be in 10-bit quality. How these two sources are combined depends on your production setup and the specific camera compound settings you use in Aximmetry.

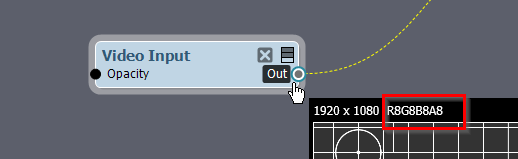

Are you sure it defaulted to 8-bit? If you have set the Video Input's Mode to Auto, Aximmetry should automatically detect and process a 10-bit signal. If you use a Video Input module to receive this SDI signal in the Flow Editor and peek at the video, you should see a format higher than R8G8B8A8 shown here:

The R8G8B8A8 format indicates standard 8-bit color channels—8 bits for Red, 8 bits for Green, 8 bits for Blue, and 8 bits for Alpha (transparency).
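To illustrate why the extra bits can matter for keying, here is a small sketch that counts how many distinct code values are available per channel at 8 and 10 bits, both over the full range and over a narrow brightness band such as a subtle gradient on a green screen. The function names and the 0.40 to 0.42 band are hypothetical choices for illustration and are not taken from Aximmetry.

```python
def quantize(value, bits):
    """Map a normalized value in [0, 1] to an integer code at the given bit depth."""
    max_code = (1 << bits) - 1
    return round(value * max_code)


def distinct_codes(lo, hi, bits, samples=4096):
    """Count the distinct codes produced by a smooth ramp from lo to hi."""
    step = (hi - lo) / (samples - 1)
    return len({quantize(lo + i * step, bits) for i in range(samples)})


# Full range: every available level per channel.
full_8 = distinct_codes(0.0, 1.0, 8)
full_10 = distinct_codes(0.0, 1.0, 10)

# Narrow band (assumed example): a subtle gradient covering 2% of the range.
band_8 = distinct_codes(0.40, 0.42, 8)
band_10 = distinct_codes(0.40, 0.42, 10)

print(f"Full range:  8-bit = {full_8} levels, 10-bit = {full_10} levels")
print(f"Narrow band: 8-bit = {band_8} levels, 10-bit = {band_10} levels")
```

With roughly four times as many steps inside the same narrow band, a keyer has finer gradations to work with around soft edges and uneven screen lighting, which is the usual argument for capturing in 10-bit.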

Warmest regards,