Hi,

I would like to ask if there is any module or combination of modules available that can extract color values from an image or video, similar to how MadMapper performs pixel mapping.

Hi Eifert,

Thank you for your quick and detailed explanation!

If I want to select a specific region from a video to feed into Measurer, is there an easier method than using the placer and manually adjusting parameters?

By the way, I’m currently researching ways to send RGB or intensity values via the OSC protocol into my QLC+, which handles DMX. MadMapper is also an option, but for some reason, I’m exploring alternative approaches for now.

Many thanks,

Moon

Hi Moon,

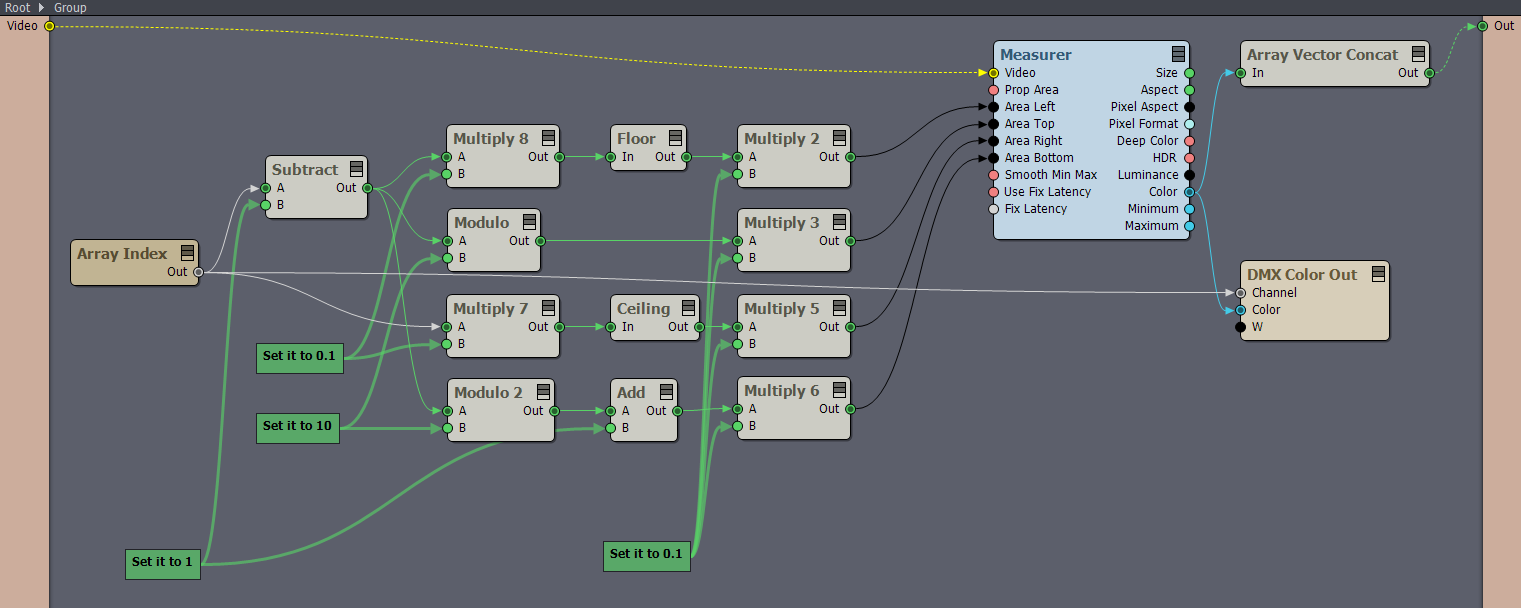

In the image above, I am following the same workflow you would use with the Placer module, but instead of cropping the video, I am using the Area input pins of the Measurer module.

Are you sure you need QLC+? Aximmetry can send DMX signals directly. However, configuring DMX control in Aximmetry can be more challenging than in dedicated lighting control software like QLC+, especially if you are more familiar with QLC+ than with the Aximmetry Flow Editor.
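If you do stay with QLC+ and OSC, it may help to see what an OSC message carrying three color values looks like on the wire. The following is only a minimal sketch of the OSC 1.0 packet layout in plain Python, not something taken from Aximmetry or QLC+. The address `/studio/backlight/rgb`, the host, and the port are hypothetical placeholders, and whether QLC+ expects one value per address or several is something to check in its own documentation.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    if not address.startswith("/"):
        raise ValueError("an OSC address must start with '/'")
    type_tags = "," + "f" * len(values)  # e.g. ",fff" for three floats
    payload = b"".join(struct.pack(">f", value) for value in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_osc(packet: bytes, host: str, port: int) -> None:
    """Send one OSC packet over UDP (host and port are up to your setup)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# Hypothetical address; RGB values are assumed to be normalized to 0..1.
packet = osc_message("/studio/backlight/rgb", 1.0, 0.5, 0.0)
```

Calling `send_osc(packet, host, port)` with the address and port of your OSC receiver would then transmit the color once; in practice you would send a new packet whenever the measured color changes.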

How many DMX lights or pixels are you working with in total? Knowing this will help me determine how many Measurer modules you will need.

Warmest regards,

Hi Eifert,

Thank you very much for your swift reply.

I’ll try experimenting with the Placer Area module as you suggested.

To give you a better idea of my current setup: I use about 24 lights in my Chroma studio DMX system. Specifically, 6 bi-color lights on top for base lighting, 3 RGB lights for the back lighting, 6 bi-color lights illuminating the green screen, 4 bi-color lights on the ground for key and fill, plus some additional RGB lights for supplementary effects.

I’ve tried several lighting apps, including MadMapper, and found that QLC+ is excellent for configuring custom fixtures and organizing scene-based lighting, even though it’s open-source. However, it lacks dynamic lighting features that interact with video or images in real time.

I understand that Aximmetry supports DMX control, and if it fits my needs, I’m open to integrating it. Most of the DMX-related information I’ve found on Aximmetry focuses on Unreal Engine 5 integration, but I’m aiming to build a 2D or 2.5D virtual production setup where cameras are on tripods and only provide PTZ values.

If you have any advice or learning resources relevant to this kind of setup, I would greatly appreciate it.

Sorry for the lengthy message, but I wanted to share the full context. Thanks again for your help.

Best regards,

Moon

Hi Moon,

It seems I misunderstood your setup a bit earlier. I thought you might be working with LED panel lighting, where you would need to control each individual LED pixel using DMX channels.

Warmest regards,

Hi,

The latest version of Aximmetry (2025.3.0) includes a new DMX_Pixel_Mapper.xcomp helper compound. This is very similar to what I was describing in my first post with the screenshots.

You can find more information here: https://aximmetry.com/learn/virtual-production-workflow/setting-up-inputs-outputs-for-virtual-production/external-controllers/pixel-mapping-via-dmx/

Warmest regards,

Hi,

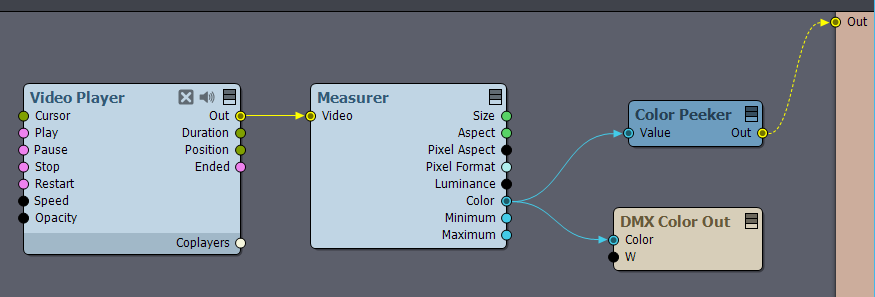

In general, the Measurer module can extract color values from video:

Note that color output pins from the Measurer can be directly connected to vector input pins if you need the color data as a vector (a group of numerical values representing the color channels).

If you want to measure color in multiple areas of the video, you can create an array compound, which essentially groups multiple Measurer modules together. More about array compounds here: https://aximmetry.com/learn/virtual-production-workflow/scripting-in-aximmetry/flow-editor/compound/#array-compound

For example, with an array of size 100, you can divide the video into 100 separate regions, with each Measurer handling one region:

Inside the array compound above (Group x100), split the measured area into 100 regions, one per array element:
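The arithmetic behind that split can be sketched outside Aximmetry as well. The Python snippet below is only an illustration under assumptions: it treats the 100 regions as a 10 x 10 grid, assumes each array element has an index from 0 to 99, and describes each region as a normalized `(left, top, width, height)` rectangle. The actual layout and value range of the Measurer's Area pins may differ, so treat the names and the rectangle format as hypothetical.

```python
from typing import List, Tuple

# Hypothetical rectangle format: (left, top, width, height), all in 0..1.
Rect = Tuple[float, float, float, float]


def grid_areas(columns: int, rows: int) -> List[Rect]:
    """Return one normalized rectangle per grid cell, ordered row by row."""
    if columns <= 0 or rows <= 0:
        raise ValueError("columns and rows must be positive")
    cell_width = 1.0 / columns
    cell_height = 1.0 / rows
    areas: List[Rect] = []
    for index in range(columns * rows):
        column = index % columns   # position within the row
        row = index // columns     # which row the index falls in
        areas.append((column * cell_width, row * cell_height,
                      cell_width, cell_height))
    return areas


# 100 regions arranged as a 10 x 10 grid, one per array element.
areas = grid_areas(10, 10)
for index in (0, 9, 10, 99):
    print(index, areas[index])
```

The same index-to-column and index-to-row mapping (modulo and integer division by the column count) is what each element of the array would need to compute for its own region.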

Keep in mind that running dozens of Measurer modules is quite resource-intensive. If you need to sample color values at the scale of many dozens of regions, let us know so we can suggest a more performance-optimized solution.

Also, I think MadMapper can be used for various types of pixel mapping. To provide more specific advice, let us know what and how many devices or surfaces you intend to output the pixels on, which protocol you plan to use (for example, DMX), and what resolutions are required.

Warmest regards,