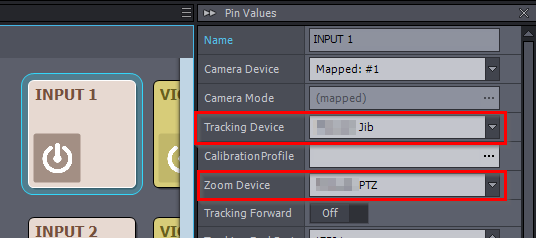

Apologies in advance if this question has already been covered elsewhere in the forum (please direct us to it if it has). Is there a suggested workflow for ingesting the PTZ FreeD tracking data from, for example, a Sony FR7 (head and lens position, rotation, etc.) and combining it with the FreeD tracking data from, for example, a Vive Mars tracker that tracks the camera's physical position in 3D space?

The attached picture shows a rig we've been experimenting with for a client for a green screen shoot next month. The jib is motorized and sits on a rotating 360 base. The FR7 is calibrated and mapped to a tracked camera in Broadcast DE. Its FreeD data arrives on a different port from the Vive Mars tracker, which is also calibrated and on its own profile.

Our question: is there a simple way to have the tracked camera use two different profiles, one for the PTZ data and the other for the PTZ's physical position in space? Or are we going about this all wrong and have 'engineered' ourselves into a weird corner? The end use case is two FR7s, one on a floor-mounted motorized slider and the other on a grid-mounted slider, each attached to a separate tracked camera in our scene.

... Just noticed that the picture shows us using a Vive Mars FizTrack with this setup. Please ignore it: we're using it only as a FIZ motor for remote zoom control on this manual G Series lens. The servo lens for this kit went out on a job and we had to get creative with what was to hand. We're only interested in repurposing the Vive tracker's data and overlaying the PTZ data from the FR7.
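
To make "overlaying" concrete, here is a rough sketch of what we have in mind if it had to be done outside Broadcast DE: take pan/tilt/roll/zoom/focus from the FR7 packet and X/Y/Z from the tracker packet, then emit a single combined packet. It assumes both devices send the standard 29-byte FreeD type D1 message (angles as signed 24-bit values in 1/32768 degree, positions in 1/64 mm, checksum of 0x40 minus the byte sum). The names `FreeDPose`, `parse_d1`, `build_d1` and `merge_poses` are our own placeholders, not part of any vendor SDK, and a plain field swap like this ignores any rotation the jib adds to the head, which would need to be composed separately.

```python
from dataclasses import dataclass

PACKET_LEN = 29   # assumed length of a FreeD type D1 message
MSG_D1 = 0xD1     # message type byte for camera position/orientation


@dataclass
class FreeDPose:
    camera_id: int
    pan: float    # degrees
    tilt: float   # degrees
    roll: float   # degrees
    x: float      # millimetres
    y: float      # millimetres
    z: float      # millimetres
    zoom: int     # raw encoder value
    focus: int    # raw encoder value


def _checksum(body: bytes) -> int:
    # FreeD checksum: 0x40 minus the sum of all preceding bytes, modulo 256.
    return (0x40 - sum(body)) & 0xFF


def _s24(raw: bytes) -> int:
    # Signed 24-bit big-endian integer.
    return int.from_bytes(raw, "big", signed=True)


def parse_d1(pkt: bytes) -> FreeDPose:
    if len(pkt) != PACKET_LEN or pkt[0] != MSG_D1:
        raise ValueError("not a FreeD D1 packet")
    if _checksum(pkt[:28]) != pkt[28]:
        raise ValueError("bad FreeD checksum")
    return FreeDPose(
        camera_id=pkt[1],
        pan=_s24(pkt[2:5]) / 32768.0,
        tilt=_s24(pkt[5:8]) / 32768.0,
        roll=_s24(pkt[8:11]) / 32768.0,
        x=_s24(pkt[11:14]) / 64.0,
        y=_s24(pkt[14:17]) / 64.0,
        z=_s24(pkt[17:20]) / 64.0,
        zoom=int.from_bytes(pkt[20:23], "big"),
        focus=int.from_bytes(pkt[23:26], "big"),
    )


def build_d1(p: FreeDPose) -> bytes:
    body = bytes([MSG_D1, p.camera_id])
    scaled = (p.pan * 32768, p.tilt * 32768, p.roll * 32768,
              p.x * 64, p.y * 64, p.z * 64)
    for value in scaled:
        body += int(round(value)).to_bytes(3, "big", signed=True)
    body += p.zoom.to_bytes(3, "big") + p.focus.to_bytes(3, "big")
    body += b"\x00\x00"  # two spare bytes
    return body + bytes([_checksum(body)])


def merge_poses(ptz: FreeDPose, tracker: FreeDPose, camera_id: int) -> FreeDPose:
    # Rotation and lens data from the PTZ head, position from the tracker.
    return FreeDPose(camera_id, ptz.pan, ptz.tilt, ptz.roll,
                     tracker.x, tracker.y, tracker.z, ptz.zoom, ptz.focus)
```

In practice this would sit between two UDP listeners (one per incoming port) and a sender on a third port that the tracked camera's profile points at, calling `build_d1(merge_poses(...))` each time a fresh FR7 packet arrives. We'd much rather do this inside the software if there is a supported way.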