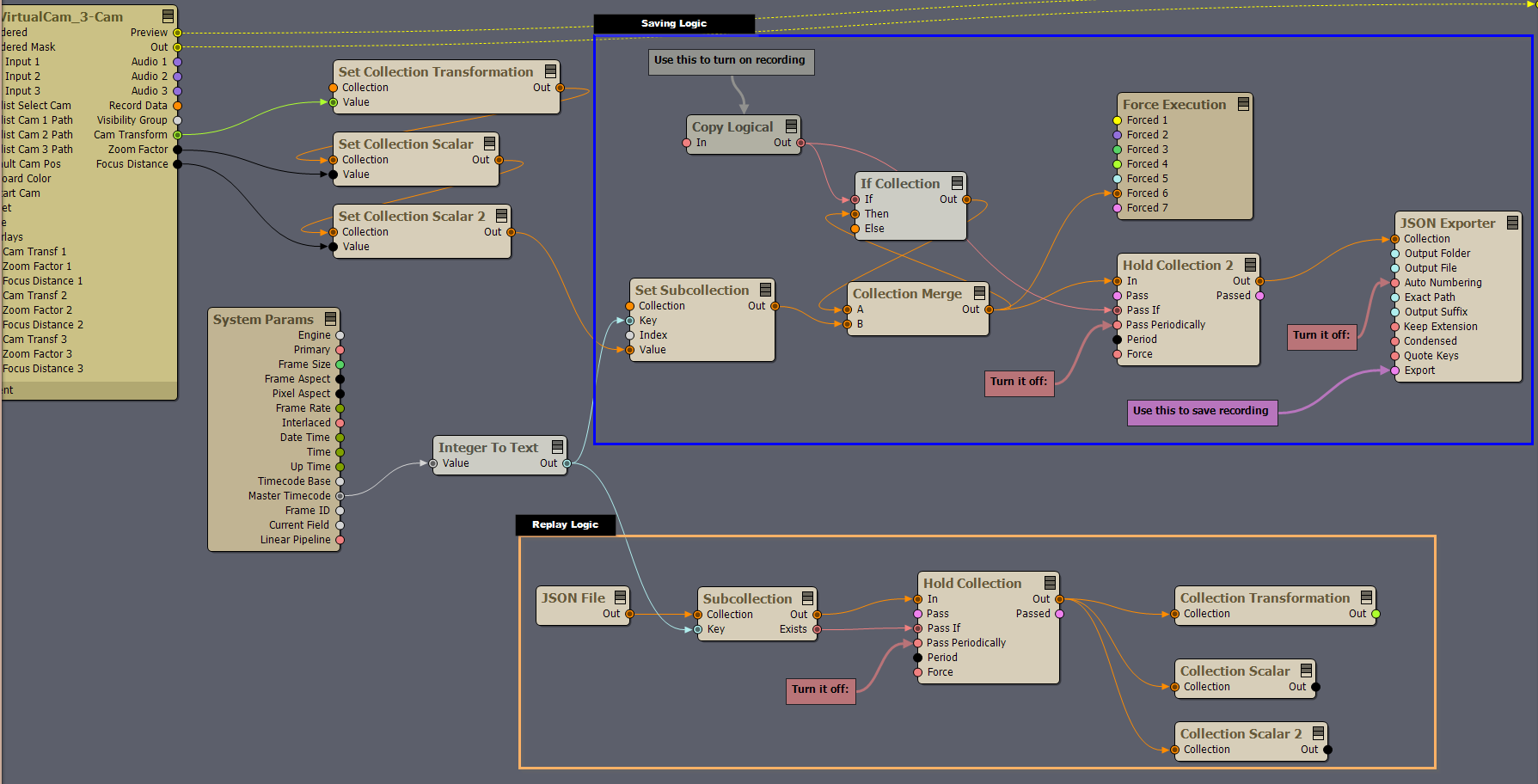

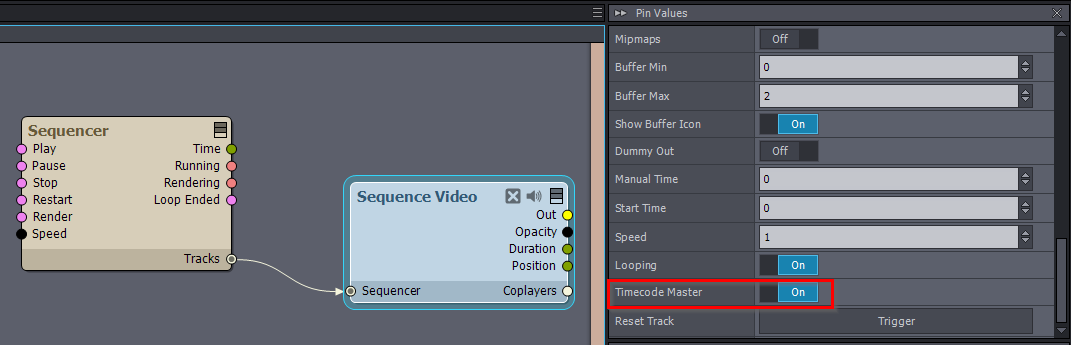

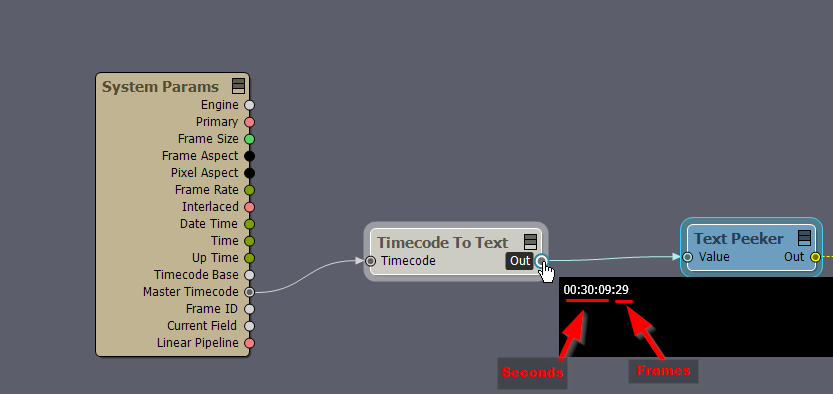

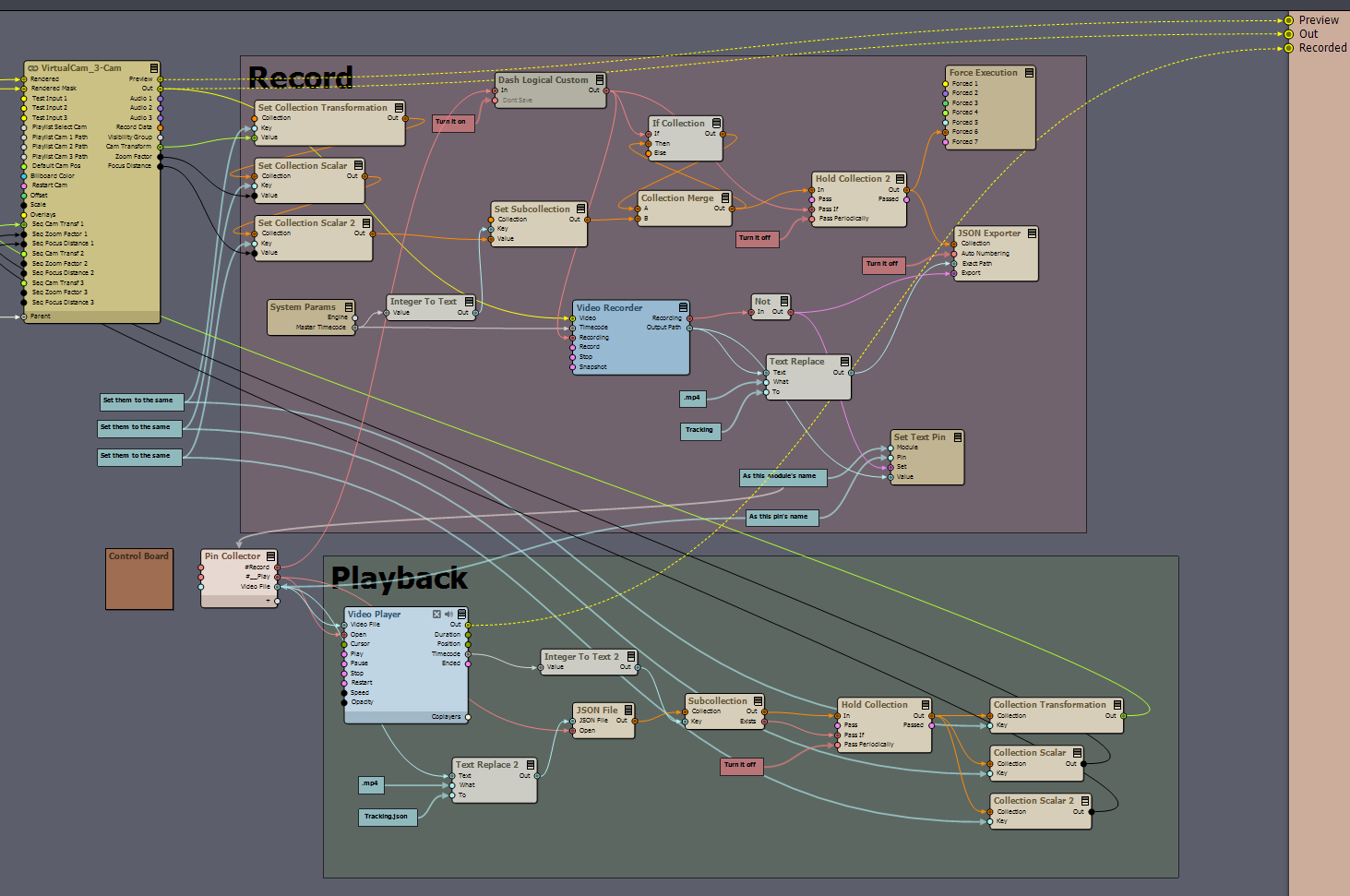

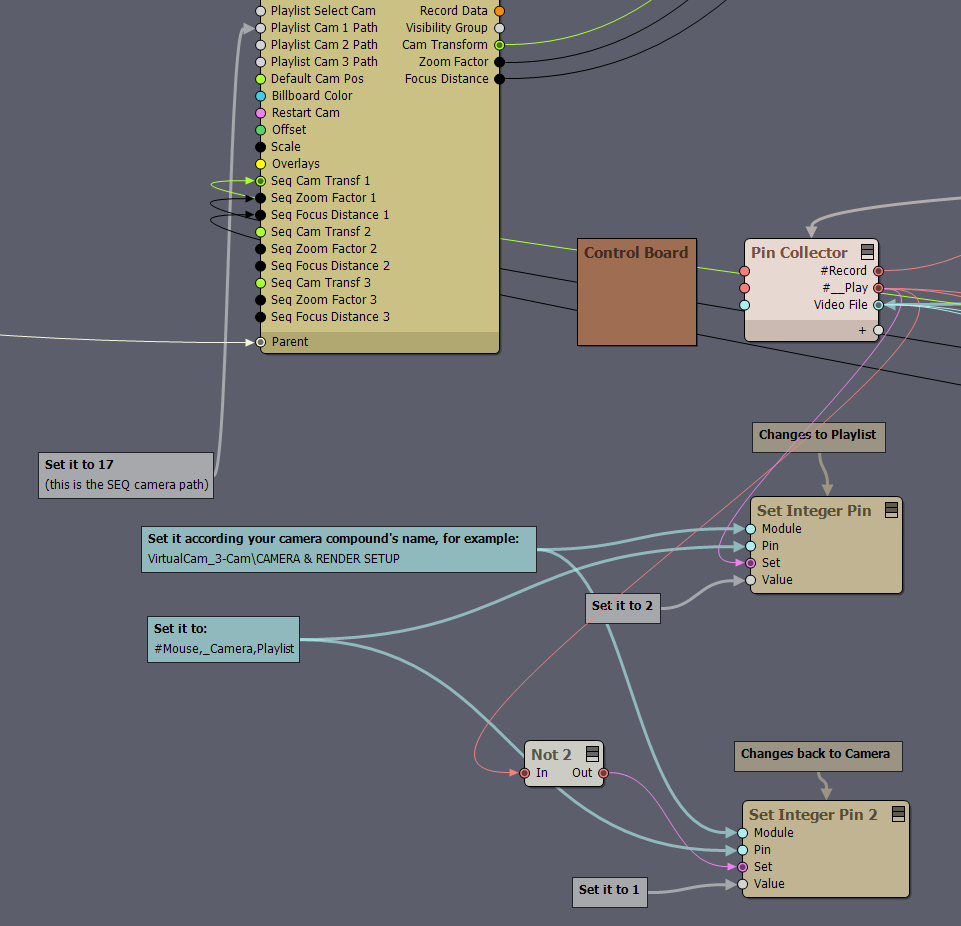

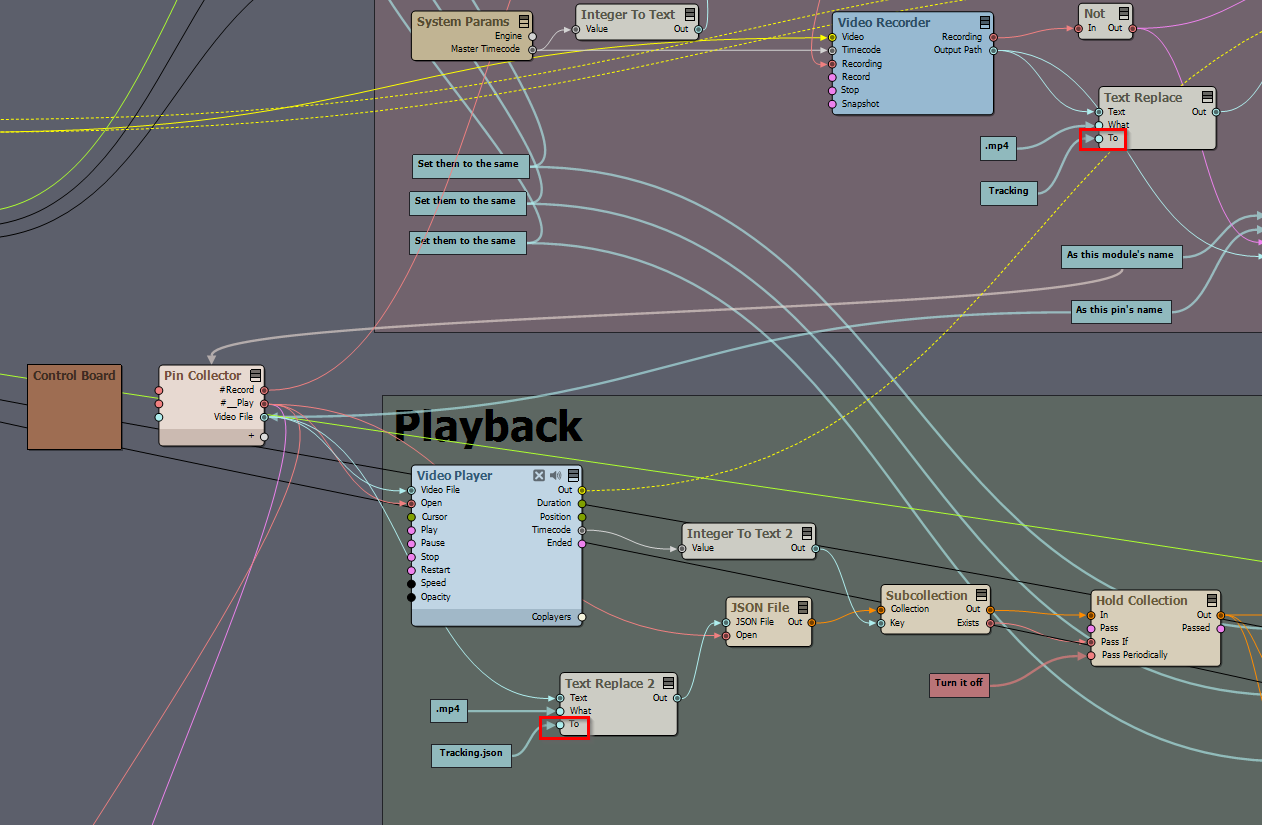

I am confused about how to start a recording in the MixedCam compound. The record button is found only on the TRK INPUTS control board, and it records only the tracked camera(s).

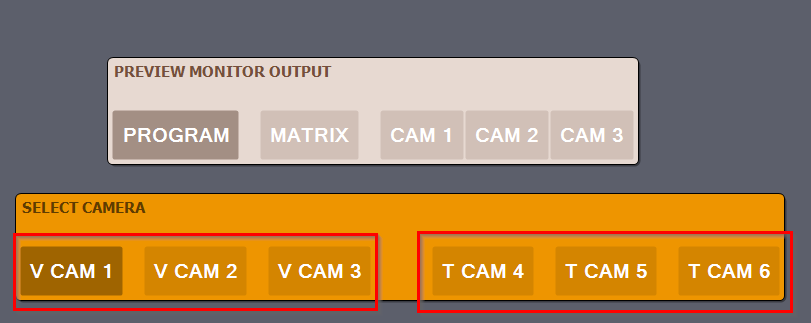

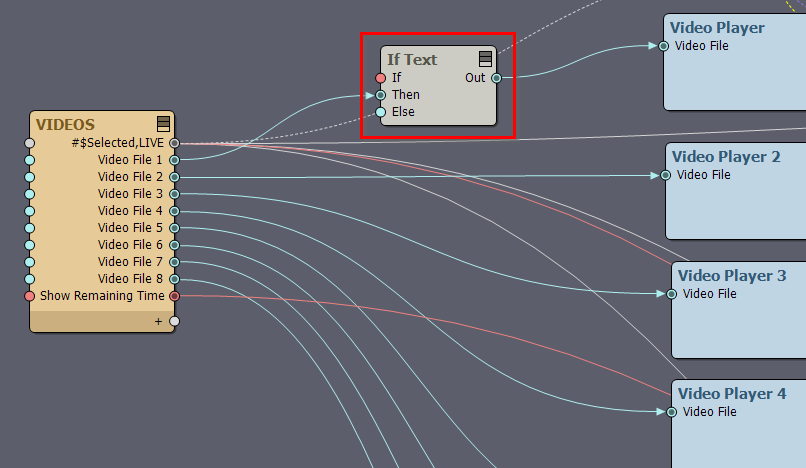

Furthermore, why does the preview monitor output on the CAMERAS control board list only three cameras (instead of VCAM 1-3 and TCAM 4-6)? Does this offer any benefit?

For example, I would like to first do a virtual camera move and then, when the position of the virtual camera matches my tracked camera, transition into selfie camera mode.