So, as I mentioned in other posts, we encountered some issues with using the Mixed Cam Unreal Compound. This got me thinking: do I even need to use dedicated V CAMS in a live/live-on-tape environment on a single workstation? Not doing so would avoid a few of these issues, namely glitches (one incorrectly rendered frame) when switching from a V CAM to a tracked camera, and the general inconsistency of control placement for similar controls between the two types of camera. So what are the implications of, say, just putting everything onto an 8-cam tracked Unreal compound? I'm on Broadcast DE 2024.3.0.

Connecting a static camera to a tracked input, not giving it a tracking device, and using cam transform and manual lens to match the virtual perspective to the real position and lens settings gives me the correct default framing. So what about the other things?

- Camera paths should work with the VR path controls, but can I put that on a sequencer? Will the movements triggered here be recorded as tracking data in the recorder of the tracked camera compound so I can play them back later for re-rendering?

- What about keying? Can I use the clean plate generator of a tracked camera input with a static camera?

- What about the use of billboards? In theory this should work fine, but does it?

- What about time code? The static camera currently does not have the correct time code embedded, nor is it genlocked (because reasons...). Can I force the recorder in the tracked camera compound to instead use the TC from a different camera (which would have the correct master time code for the whole system, provided by an external generator)?

Hi,

Yes, you don't necessarily need to use a V CAM with static cameras; you can use Tracked Camera compounds instead, although there are some limitations.

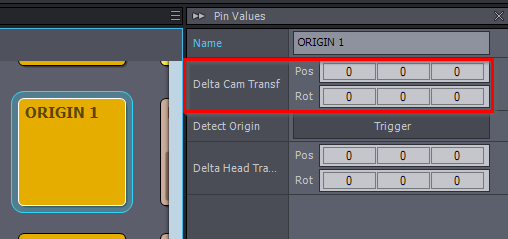

You can set the position of the static camera using the Delta Cam Transf parameter in the ORIGIN panel:

And use the manual lens parameters as you've mentioned.
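When matching the manual lens to the real one, it can help to work out the expected field of view from the focal length and sensor width first. The snippet below is only a minimal sketch: it assumes an ideal pinhole lens with no distortion, the function name `horizontal_fov_deg` is made up for illustration and is not part of Aximmetry, and whether the manual lens parameters in your version take a field of view or a focal length is something to verify in the panel itself.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view in degrees for an ideal pinhole lens.

    Hypothetical helper for illustration only; it ignores lens distortion
    and any sensor crop applied by the camera's output mode.
    """
    if sensor_width_mm <= 0 or focal_length_mm <= 0:
        raise ValueError("sensor width and focal length must be positive")
    # Standard pinhole relation: fov = 2 * atan(width / (2 * focal length))
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


if __name__ == "__main__":
    # A 36 mm wide sensor with an 18 mm lens gives a 90 degree horizontal FOV.
    print(round(horizontal_fov_deg(36.0, 18.0), 1))  # prints 90.0
```

Treat the result as a starting value and fine-tune by eye against a known reference in the studio, since real lenses deviate from the pinhole model.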

Tracked camera virtual paths don't have their transformation pins exposed, so if you need to access those, you'll have to edit the camera compound.

Virtual camera movements won't be saved by the built-in recorder of the tracked camera compounds. It records the tracking data directly from the Camera Tracking module, without any virtual camera movements or similar adjustments applied.

However, if you want to re-render using only Unreal Editor (or other third-party software), you can use the Record_3-Audio compound to save the final output camera transformation of the entire production as an animated FBX file. More details can be found here: https://aximmetry.com/learn/virtual-production-workflow/setting-up-inputs-outputs-for-virtual-production/video/recording/how-to-record-camera-tracking-data/#final-composite-recording-1

A feature that records the entire production, including every interaction, is frequently requested, and it is high on our request list.

The tracked camera has a 3D clean plate, while the virtual cameras have a 2D clean plate, and the two are not compatible with each other. So you won't be able to use the 2D clean plate in tracked cameras unless you edit the camera compound.

You will be able to use billboards just as with any other tracked camera input. However, note that the billboards of tracked cameras have different settings from the virtual camera's billboards.

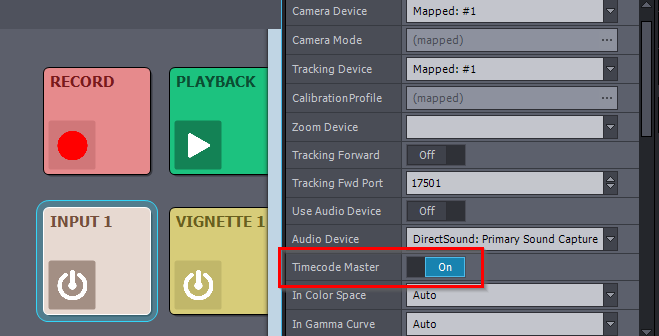

In the INPUTs, you can set which camera will provide the master timecode:

You most likely want one of the tracked cameras set as master.

Warmest regards,