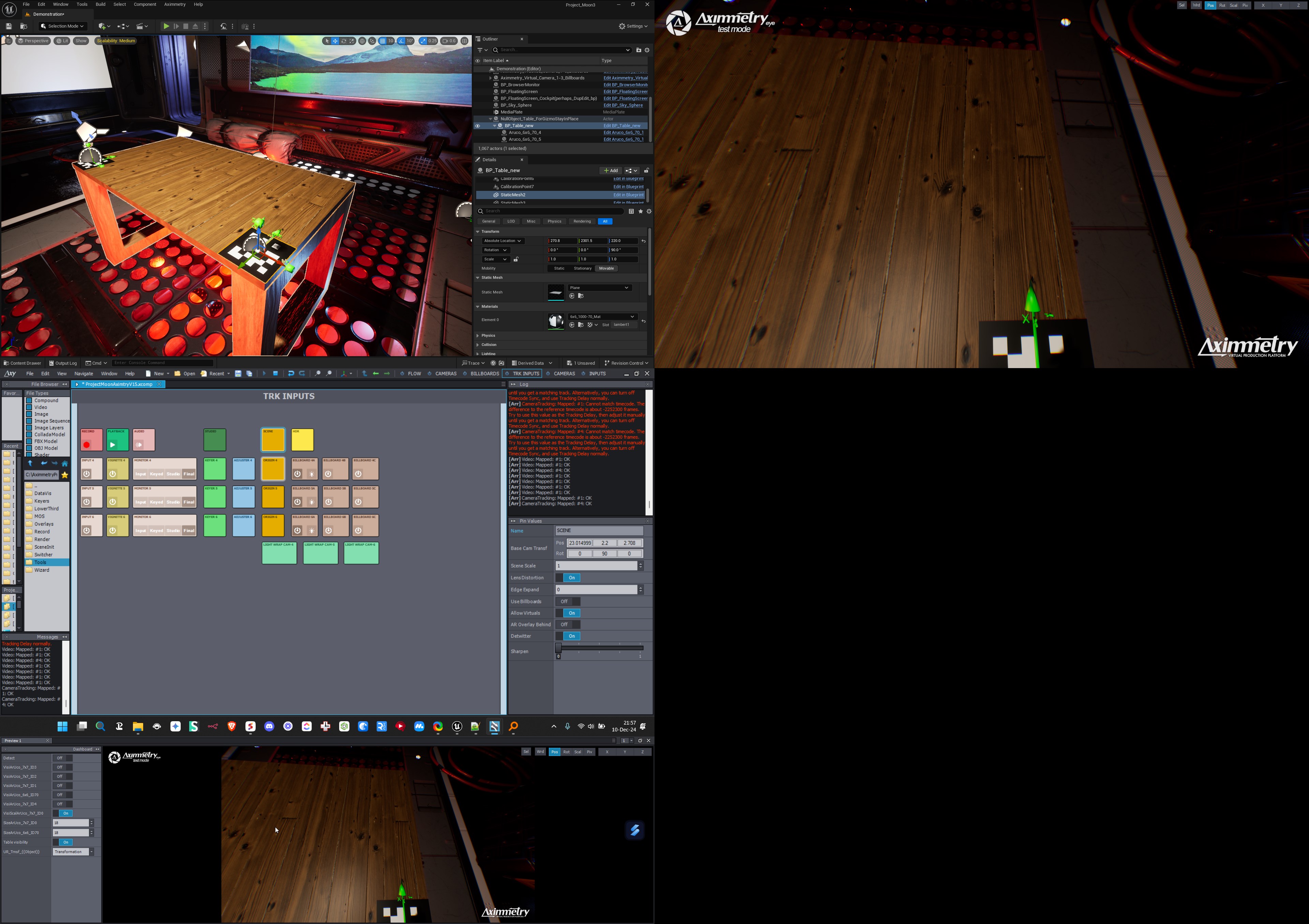

When using a virtual camera, it's pretty simple to place it where you want in the 3D scene: you switch to edit mode, navigate, find your spot, and "put in front" the billboard.

When using a tracked camera, I know you have to use the Scene node to move the origin. What is your workflow for reaching the same position or area? How do you get your bearings? For example, I use a fairly large scene and its origin happens to be outside the building, so I'm in complete darkness, stuck with the FOV of my tracked lens. There are no landmarks or reference points to tell me where to go in that sea of black :)

First, note that you can switch to "free camera" mode even inside a tracked camera compound, so you can move the view around freely to understand how your scene is oriented and placed.

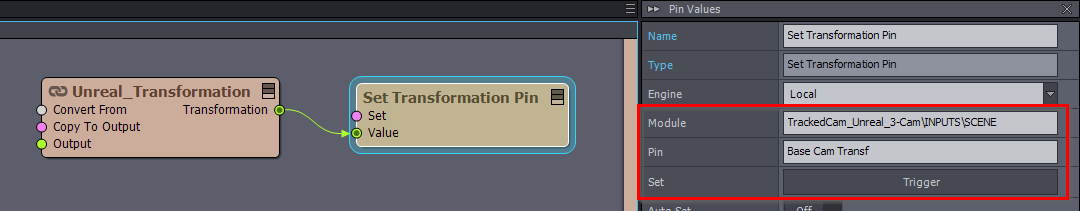

Then, I try to make sure the origin of my 3D scene is at a sensible spot (e.g. in the middle of the set, not inside a wall). The Scene tab can help you move things around.
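If you want a number to type into the Scene tab rather than eyeballing it, the offset is simple arithmetic: take the bounding box of the set geometry, center it horizontally, and drop the floor to zero. The sketch below is a hypothetical illustration in plain Python, not a feature of the tool; it assumes a Y-up coordinate system and that you can read a few corner positions of the set (the `origin_offset` and `apply_offset` names are made up for this example).

```python
def origin_offset(points):
    """Offset that moves the set's horizontal bounding-box center to (0, _, 0)
    and its lowest point (the floor) to y = 0. Assumes Y is the up axis."""
    xs, ys, zs = zip(*points)
    cx = (min(xs) + max(xs)) / 2  # horizontal center along X
    cz = (min(zs) + max(zs)) / 2  # horizontal center along Z
    floor = min(ys)               # lowest point, treated as the floor
    return (-cx, -floor, -cz)


def apply_offset(points, offset):
    """Translate every point by the given offset."""
    ox, oy, oz = offset
    return [(x + ox, y + oy, z + oz) for x, y, z in points]


# Two opposite corners of a hypothetical set, far from the scene origin.
corners = [(10, 0, 20), (14, 3, 26)]
offset = origin_offset(corners)
print(offset)                         # (-12.0, 0, -23.0)
print(apply_offset(corners, offset))  # [(-2.0, 0, -3.0), (2.0, 3, 3.0)]
```

The translation is what you would enter as the scene offset; after applying it, the origin sits on the floor in the middle of the set.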

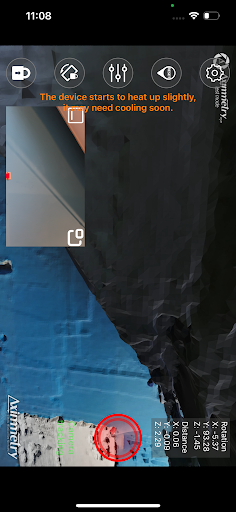

Finally, I place an ArUco marker on the studio floor where I need the origin to be, and use the "detect origin" trigger to roughly position the cameras relative to the studio.
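The geometry behind marker-based origin detection can be sketched as follows. A marker detector typically gives the marker's pose in the camera frame (rotation `R` and translation `t`, so that `p_cam = R·p_marker + t`). To make the marker the origin, you invert that pose, which gives the camera's pose in the marker frame: rotation `Rᵀ` and translation `-Rᵀ·t`. This is a hypothetical plain-Python illustration of that inversion, not how the "detect origin" trigger is actually implemented; the function names are made up for the example.

```python
def transpose(m):
    """Transpose a 3x3 matrix given as a list of rows."""
    return [list(row) for row in zip(*m)]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def camera_pose_in_marker_frame(r_cm, t_cm):
    """Invert a rigid transform.

    r_cm, t_cm: marker pose in the camera frame (p_cam = r_cm @ p_marker + t_cm).
    Returns (r_mc, t_mc) such that p_marker = r_mc @ p_cam + t_mc, i.e. the
    camera pose expressed with the marker as the origin.
    """
    r_mc = transpose(r_cm)                   # inverse of a rotation is its transpose
    t_mc = [-x for x in mat_vec(r_mc, t_cm)]  # -R^T t
    return r_mc, t_mc


# Marker seen 3 m straight ahead of the camera, no rotation:
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
r, t = camera_pose_in_marker_frame(identity, [0, 0, 3])
print(t)  # [0, 0, -3]: the camera sits 3 m behind the new origin
```

Because the inverse of a rotation matrix is its transpose, no general matrix inversion is needed.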