We are running Aximmetry Broadcast DE on Windows 11 Enterprise here, with 3 cameras in and 3 individual feeds out to a BMD ATEM for switching. The format is 1080p59.94, and both cards and the switcher are genlocked to a BMD Sync Generator. The cards are 2x BMD DeckLink 8K Pro (not G2).

There seem to be issues with the drivers for these cards. The newest version of BMD Desktop Video (do I even need this?) won't even recognize the cards, and older versions seem to have serious stability problems.

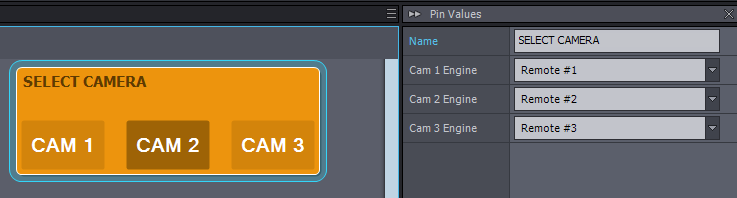

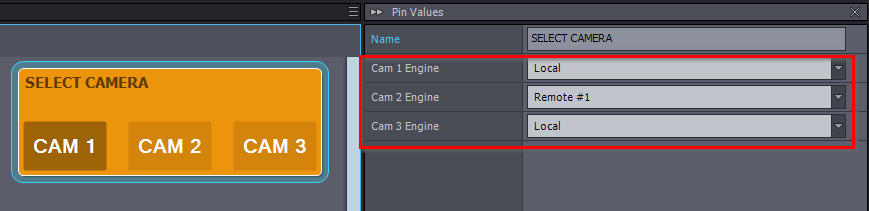

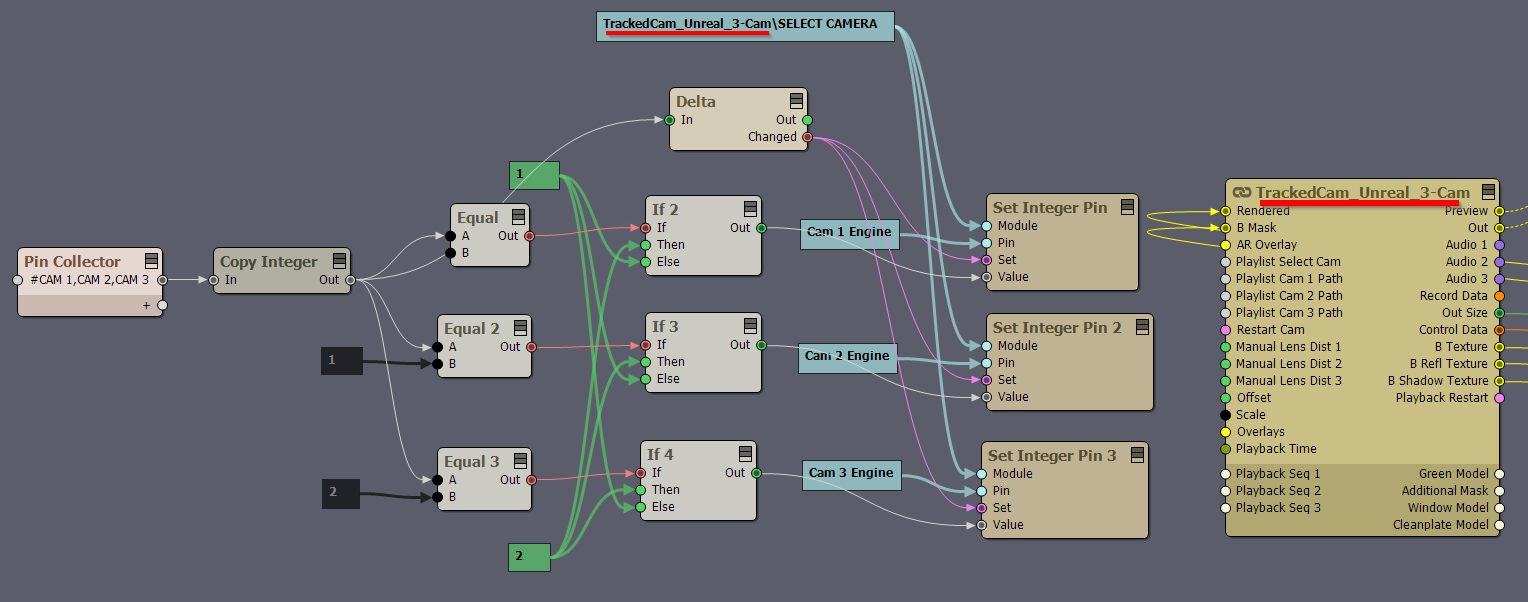

When you select a CAM in the Pin Collector, it will change that CAM to Remote #1 in the SELECT CAMERA panel and set the rest to Local.

I wonder if this could instead be an issue with running two DeckLink cards in the same machine. You could easily saturate the PCIe bus with all those video signals. Also, how are you outputting separate feeds for the cameras? Unreal can only render one camera at a time.
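
For anyone who wants to sanity-check the bandwidth question, here is a rough back-of-the-envelope sketch. It rests on assumptions that are not confirmed in this thread: 10-bit 4:2:2 frames (20 bits per pixel), a PCIe Gen 3 x8 link per card, and only the active picture being transferred. The function names are made up for illustration. It only estimates raw video payload, so it says nothing about slot wiring, lanes shared through the chipset, or driver behaviour.

```python
# Rough PCIe budget for uncompressed video.
# All inputs are assumptions for illustration, not measurements from this system.

def stream_gbps(width, height, fps, bits_per_pixel):
    """Active-picture payload of one uncompressed stream, in Gbit/s."""
    return width * height * fps * bits_per_pixel / 1e9

def pcie_gen3_gbps(lanes):
    """Line rate per direction: 8 GT/s per lane with 128b/130b encoding."""
    return 8.0 * lanes * 128 / 130

FPS = 60000 / 1001        # 59.94 Hz
BITS_PER_PIXEL = 20       # assumed 10-bit 4:2:2; use 32 for 8-bit BGRA

per_stream = stream_gbps(1920, 1080, FPS, BITS_PER_PIXEL)
inputs_total = 3 * per_stream    # card -> host
outputs_total = 3 * per_stream   # host -> card
link = pcie_gen3_gbps(8)         # assumed Gen 3 x8 link, per direction

print(f"one stream:      {per_stream:.2f} Gbit/s")
print(f"3 inputs total:  {inputs_total:.2f} Gbit/s")
print(f"3 outputs total: {outputs_total:.2f} Gbit/s")
print(f"Gen 3 x8 link:   {link:.2f} Gbit/s per direction")
```

Change `BITS_PER_PIXEL` or the lane count to match the real setup. If a card sits in a slot that is electrically x4, or shares lanes with the GPU, the available headroom shrinks accordingly.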