Hi, thanks for a great app. I have tried using the 11-inch iPad Pro with the Aximmetry Eye app, and the overall experience was really good despite some minor jitter when moving the camera around. I use the iPad Pro rather than the iPhone because it doesn't overheat as easily.

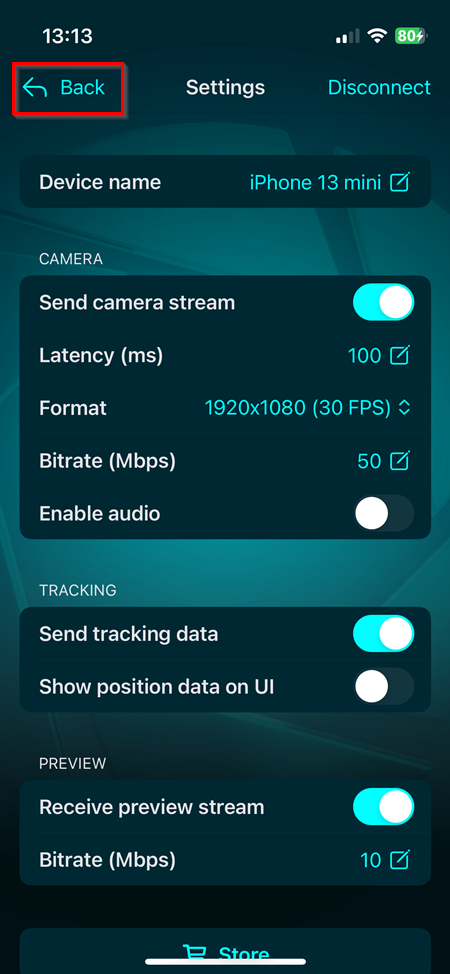

I then experimented with mounting the iPad on a camera to use it as a tracker, and unfortunately it drifted quite a bit. I understand that the iPad's LiDAR tracking is not meant for professional use, but would the additional Aximmetry Camera Calibrator help in this area? I'm currently on the free Studio DE version.

For static shots, though, this iPad/camera combo is great: no jitter or drift, rock solid.

Hi,

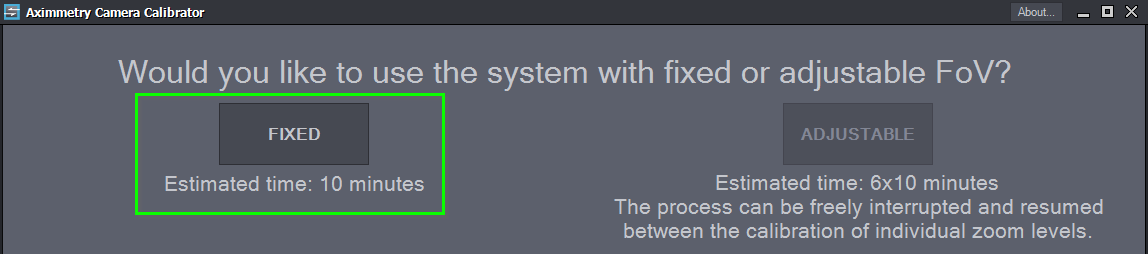

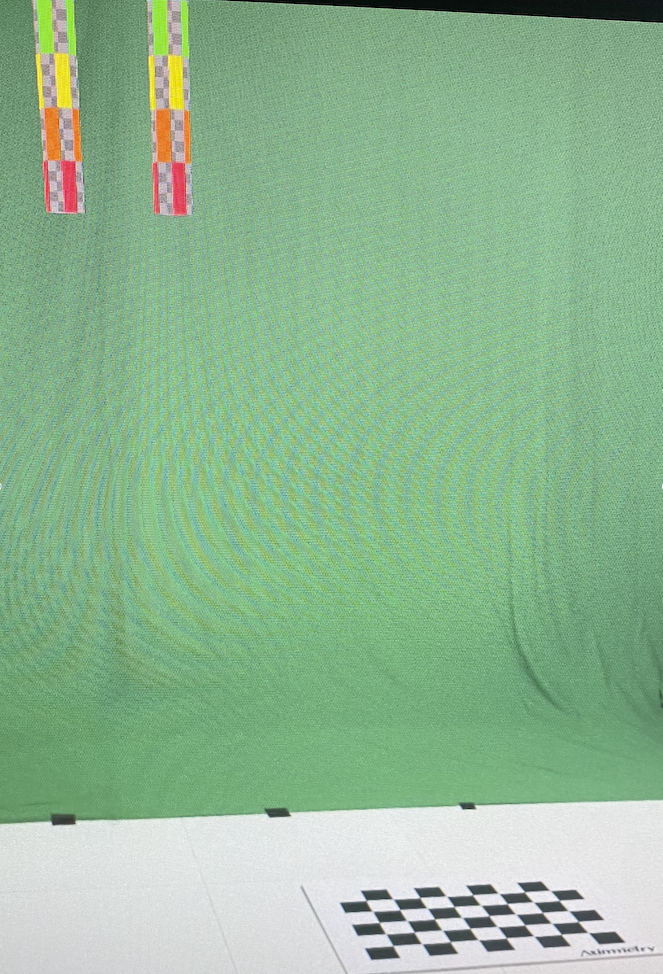

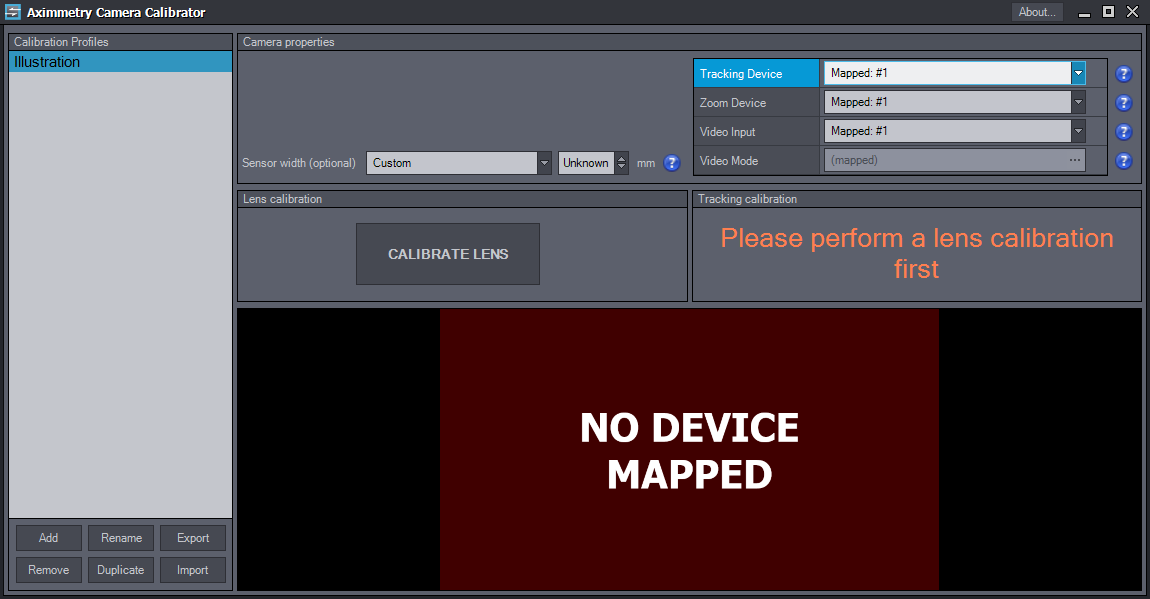

Yes, using the Aximmetry Camera Calibrator will help reduce the drifting. The drift occurs because, when you use Aximmetry Eye's tracking with a video input other than the phone's own camera, Aximmetry does not know where that camera is located relative to the tracked device. The Camera Calibrator's tracking calibration calculates this offset, which Aximmetry calls the Delta Head Transform. Although you can specify the Delta Head Transform manually by measuring the offset by hand, achieving good precision is very challenging, particularly for the rotational part of the offset. Furthermore, the Camera Calibrator can also perform lens calibration, which can enhance realism.
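To illustrate why the rotational part of the offset is the hard part to measure by hand, here is a minimal, generic rigid-transform sketch. It is not Aximmetry's internal code or coordinate convention; it only assumes that the camera pose is obtained by chaining the tracked device pose with a fixed device-to-camera offset (conceptually what the Delta Head Transform represents). The tracker pose, offset values and helper names are hypothetical.

```python
import math

# Generic rigid-transform sketch; NOT Aximmetry's internal code or conventions.
# A pose is (R, t): a 3x3 rotation matrix and a translation vector in metres.

def rot_y(deg):
    """Rotation about the vertical Y axis (pan) by `deg` degrees."""
    r = math.radians(deg)
    c, s = math.cos(r), math.sin(r)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def compose(outer, inner):
    """Chain two poses: express `inner` (local to `outer`) in outer's parent frame."""
    ra, ta = outer
    rb, tb = inner
    return mat_mul(ra, rb), [x + y for x, y in zip(mat_vec(ra, tb), ta)]

def transform_point(pose, p):
    """Map a point from the pose's local frame into its parent frame."""
    r, t = pose
    return [x + y for x, y in zip(mat_vec(r, p), t)]

# Hypothetical values: tracked iPad pose in the studio, and the iPad-to-camera offset.
tracker_pose = (rot_y(30.0), [1.0, 1.5, 2.0])
true_delta = (rot_y(0.0), [0.0, -0.15, 0.05])       # actual mounting offset
measured_delta = (rot_y(1.0), [0.0, -0.15, 0.05])   # same offset, pan off by 1 degree

# A point 5 m in front of the camera (+Z is the viewing axis in this sketch).
target = [0.0, 0.0, 5.0]
true_world = transform_point(compose(tracker_pose, true_delta), target)
measured_world = transform_point(compose(tracker_pose, measured_delta), target)

error_m = math.dist(true_world, measured_world)
print(f"misplacement at 5 m: {error_m * 100:.1f} cm")
```

Running it prints `misplacement at 5 m: 8.7 cm`: even with a perfectly measured position, a 1 degree rotation error in the offset shifts a point 5 m away by roughly 8.7 cm, and the error grows linearly with distance. That is why a calibrated offset is preferable to a tape-measured one.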

Additionally, to minimize drifting, you should also define the origin (null point) of the virtual environment: https://aximmetry.com/learn/virtual-production-workflow/preparation-of-the-production-environment-phase-i/starting-with-aximmetry/aximmetry-eye/what-is-aximmetry-eye-and-how-to-use-it/#defining-the-null-point-of-the-virtual-enviroment

Warmest regards,