Hello everyone,

I'm interested in attempting virtual production using Mo-Sys's StarTracker and UE5. Although I am a beginner with Aximmetry, I am conducting tests using the trial version of the broadcast license.

I was able to set up UE and integrate it into Aximmetry, but even after following the tutorial below step by step, I see a red screen with "Device Not Found" displayed. https://aximmetry.com/learn/virtual-production-workflow/preparation-of-the-production-environment-phase-i/led-wall-production/using-led-walls-for-virtual-production/

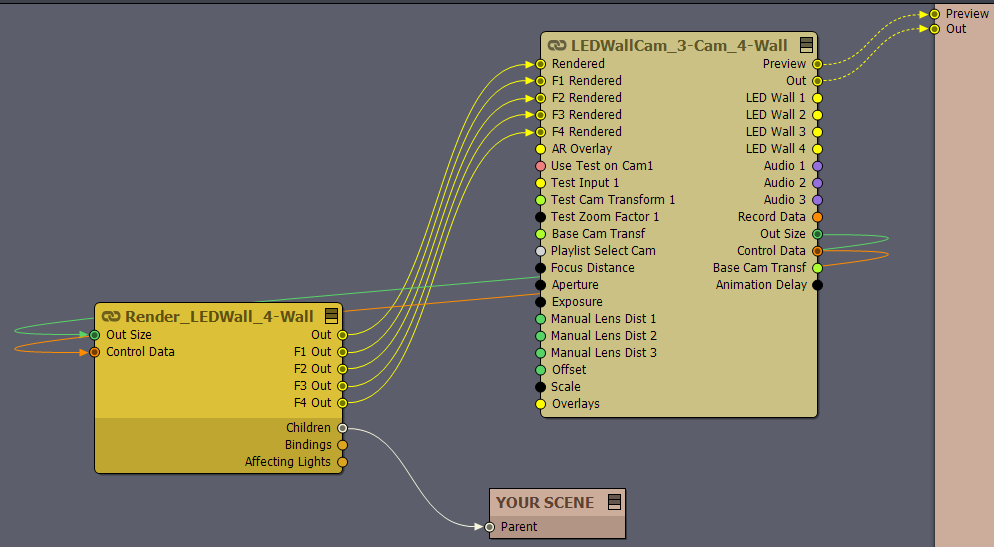

Additionally, I'm puzzled by the node labeled "YOUR SCENE" shown in the screenshots of the "Setting Up an Aximmetry Native Engine Scene" section of the tutorial. I assume it should be used with the SCENE NODE, but I couldn't find a detailed explanation.

I would greatly appreciate any advice or insights from anyone familiar with this. Thank you in advance.

The "Device Not Found" message usually means that you haven’t assigned a valid video input for the tracked camera.

The documentation and screenshot you’re referring to are (as per the section title) for working with scenes built in the native Aximmetry 3D engine, not Unreal scenes. Follow the documentation for working with UE5 scenes instead.