Hello everyone,

We have been working on live scene production with Aximmetry for several months, using Unreal 5.2 and the latest version of Aximmetry.

Following our first production broadcast on French TV, we have a few questions for support:

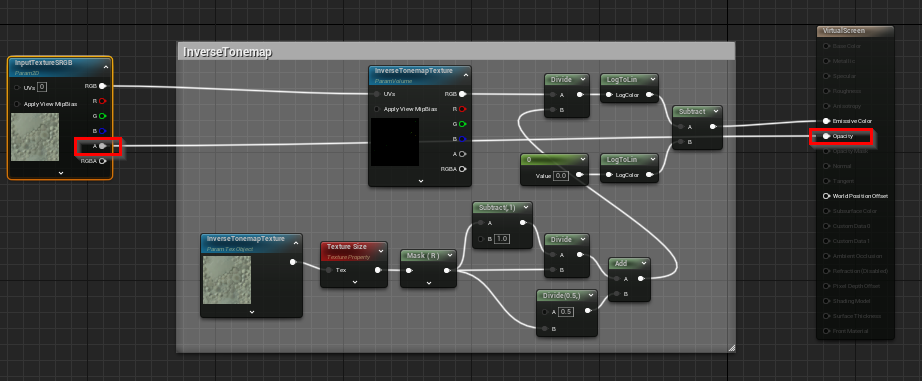

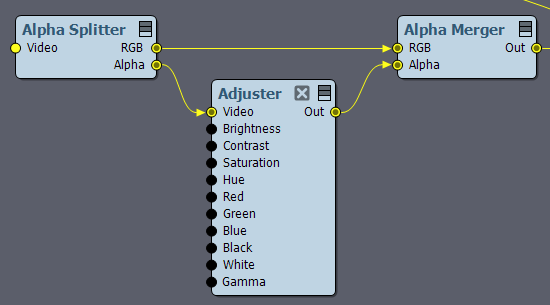

On this project, we had to use virtual screens in our scene whose video streams contain an alpha channel, so we followed the tutorial and Blueprints from Eifert (https://my.aximmetry.com/post/3164-virtual-screen-issue) and therefore applied Invert Tonemap to the textures.

Using his technique, we found that the video stream is displayed on the virtual screen at much higher quality than with the "classic" method described in the Aximmetry documentation: text is detailed and free of aliasing, and overall the image detail actually matches the source material our graphic designers provided.

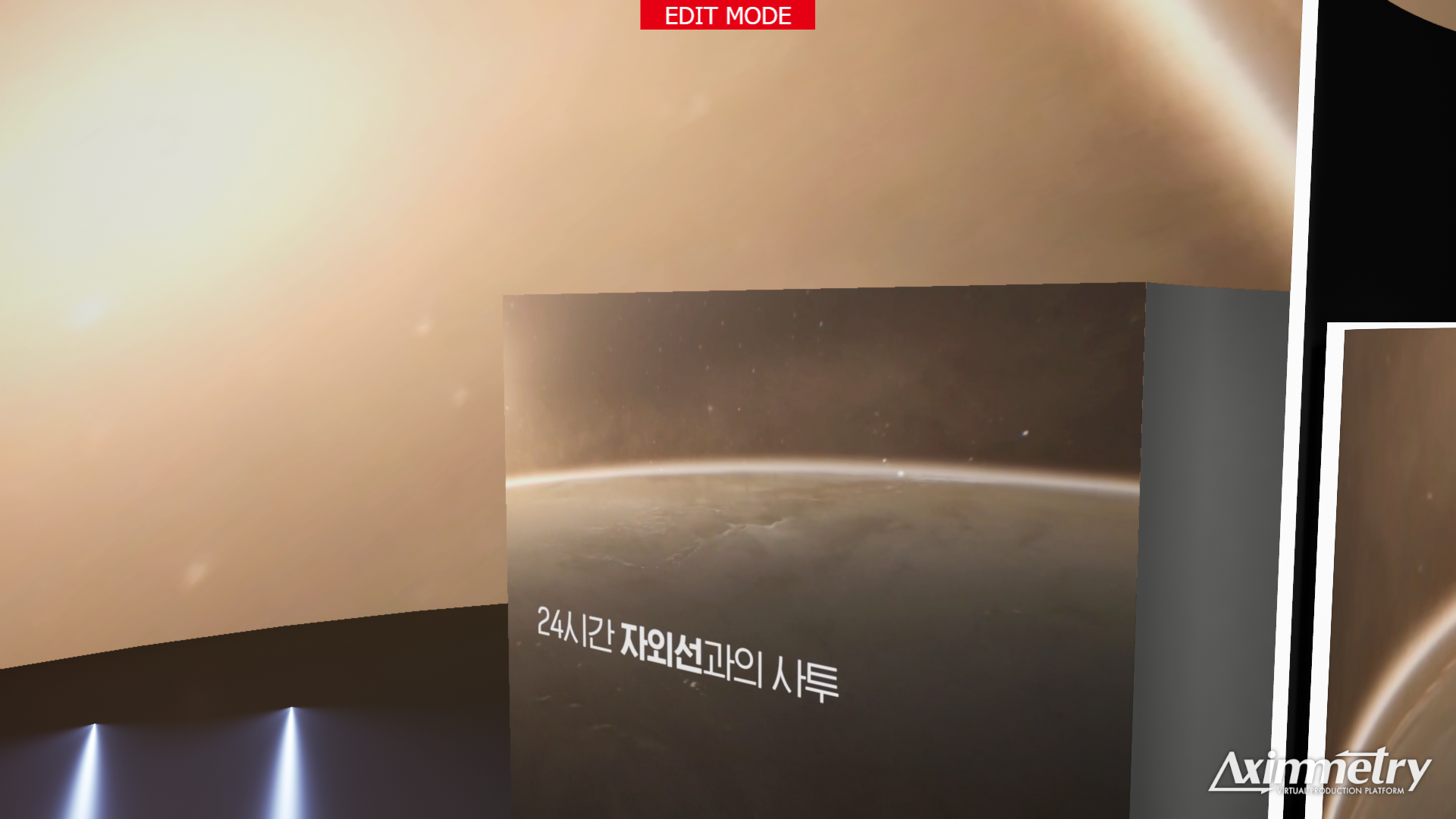

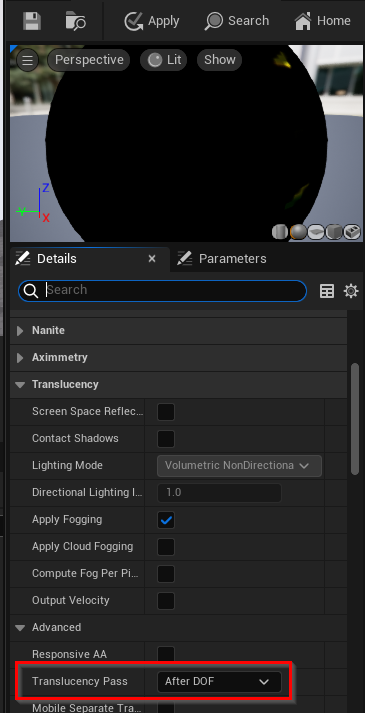

However, with this technique we ran into aliasing along the edges of the image (see img1) and display problems when one screen is in front of another (see img2).

img1

img2

What do you recommend for texturing broadcast screens whose video has an alpha channel?

In addition, do you plan to update your tutorial on displaying video elements so that they faithfully reproduce the incoming stream?

Thanks in advance,

The aliasing comes from the fact that anti-aliasing (which is what causes the blurry text) is now turned off for the object. You could work around this somewhat by adding a very small feathered edge to the screen plane. Either send the screen texture with alpha from Aximmetry (and connect the alpha to the material in Unreal as well), or create the soft feathered mask directly in the Unreal material.
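As a rough illustration of the feathered-mask idea, the sketch below computes an opacity value from a screen plane's UV coordinates: it fades smoothly from 0 at each edge to 1 a short distance inside it. In an Unreal material the same math would be built from material nodes rather than Python; the function names and the `feather` width are hypothetical, and multiplying the mask by the stream's own alpha is an assumption about how the two would be combined.

```python
def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Hermite interpolation between edge0 and edge1, clamped to [0, 1]."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def feathered_mask(u: float, v: float, feather: float = 0.01) -> float:
    """Edge-feather opacity for a screen plane at UV (u, v), in [0, 1].

    `feather` is the width of the soft band as a fraction of the UV range
    (hypothetical default; tune it to the screen's on-camera size).
    """
    # Distance to the nearest edge on each axis: 0 at the edge, 0.5 at the centre.
    du = min(u, 1.0 - u)
    dv = min(v, 1.0 - v)
    # Fade in from the edge over `feather`, on both axes.
    return smoothstep(0.0, feather, du) * smoothstep(0.0, feather, dv)


def final_opacity(video_alpha: float, u: float, v: float, feather: float = 0.01) -> float:
    """Combine the incoming stream's alpha with the edge feather (assumed: multiply)."""
    return video_alpha * feathered_mask(u, v, feather)


if __name__ == "__main__":
    # Sample the mask across the left edge, halfway up the screen.
    for u in (0.0, 0.005, 0.01, 0.5):
        print(f"u={u:<6} mask={feathered_mask(u, 0.5):.3f}")
```

Multiplying keeps any transparency already present in the stream while guaranteeing that the outermost pixels of the plane fade out instead of ending on a hard, aliased edge.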

The second issue looks like it’s caused by incorrect depth sorting in Unreal. Translucent materials have depth-sorting issues because they’re rendered in a separate pass. Hopefully @Eifert can suggest a solid solution, perhaps by changing a material or mesh setting.
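To show why two overlapping screens can end up drawn in the wrong order, here is a simplified model of per-object translucency sorting: each translucent object is drawn whole, back to front, by the distance from the camera to its bounds centre, rather than being depth-tested per pixel. This is an assumption-level sketch, not Unreal's renderer. Unreal does expose related controls that may be worth checking (the Translucent Sort Policy project setting and a per-component Translucency Sort Priority); the `sort_priority` field below is only a hypothetical stand-in for the latter.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class TranslucentScreen:
    name: str
    center: tuple[float, float, float]  # bounds centre in world space
    sort_priority: int = 0  # hypothetical stand-in for a per-object priority override


def draw_order(screens: list[TranslucentScreen], camera: tuple[float, float, float]) -> list[str]:
    """Return names in draw order: lower priority first, then farthest centre first."""
    def key(s: TranslucentScreen) -> tuple[int, float]:
        return (s.sort_priority, -math.dist(s.center, camera))
    return [s.name for s in sorted(screens, key=key)]


if __name__ == "__main__":
    camera = (0.0, 0.0, 0.0)
    # A wide screen behind a small one: where they overlap, the small screen
    # is nearer, but the wide screen's centre is closer to the camera.
    back = TranslucentScreen("back", (0.0, 0.0, 4.5))
    front = TranslucentScreen("front", (3.0, 0.0, 4.0))
    print(draw_order([back, front], camera))  # wide screen drawn last, over the small one
    front.sort_priority = 1
    print(draw_order([back, front], camera))  # priority forces the small screen on top
```

The point of the example is that sorting by a single distance per object can disagree with the per-pixel depth relationship when screens differ in size or overlap at an angle, which would match the artifact in img2; an explicit priority override sidesteps the distance heuristic for a fixed set of screens.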