I'm trying to find a suitable way of synchronising audio with the final output when rendering with Unreal Engine.

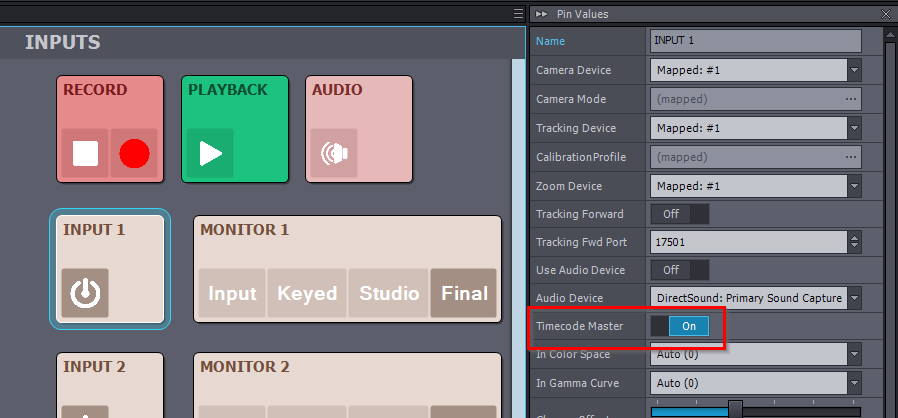

Obviously there's some delay in the system, which (I assume) is defined by the frame latency in the properties menu. The issue is that when we record the final output in Aximmetry, the audio has been delayed to be in sync, yet each recorded file has the audio out of sync by a different amount.

We usually record audio separately anyway, but since the scratch audio recorded to the clip is off by a varying amount, synchronising can be a fairly difficult task.

We've recently switched to a HyperDeck to record the final program feed externally. It is fed the same input timecode as everything else in the system (camera, tracking data, audio recorder, etc.). We've yet to test it, but I'm expecting the image delay to be constant, which should make synchronising easier.

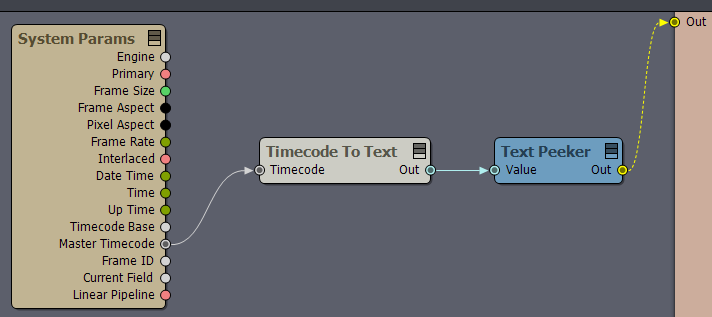

I'm wondering, though, if there's a way of 'delaying' the timecode coming into Aximmetry before feeding it to the HyperDeck, so that the timecode of the audio and the timecode of the rendered video match up. Would this delay just be the number of frames we set in the properties, or is there another way of finding it? I also wonder if this would help set a constant value for the tracking delay.

This would be great later on when we're doing post comp work, as we could quickly align any rendered video to the raw recorded data, visuals and audio.
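To make the idea concrete, the timecode delay I have in mind is just frame arithmetic. Below is a minimal Python sketch of it, not anything Aximmetry or the HyperDeck provides. It assumes non-drop-frame timecode at an integer frame rate (25 fps here), and `LATENCY_FRAMES` is a made-up value standing in for whatever the real render delay turns out to be. The function names are my own.

```python
FPS = 25  # assumed integer, non-drop-frame rate


def tc_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert HH:MM:SS:FF timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_tc(frames: int, fps: int = FPS) -> str:
    """Convert an absolute frame count back to HH:MM:SS:FF."""
    frames %= 24 * 60 * 60 * fps  # wrap around at 24 hours
    ss, ff = divmod(frames, fps)
    mm, ss = divmod(ss, 60)
    hh, mm = divmod(mm, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def shift_tc(tc: str, offset_frames: int, fps: int = FPS) -> str:
    """Shift a timecode by a signed number of frames."""
    return frames_to_tc(tc_to_frames(tc, fps) + offset_frames, fps)


# Hypothetical render latency in frames; the real value would need measuring.
LATENCY_FRAMES = 4

# A frame leaving the renderer now was captured LATENCY_FRAMES earlier,
# so the timecode stamped on the output is the input timecode minus the latency.
print(shift_tc("10:00:00:02", -LATENCY_FRAMES))  # 09:59:59:23
```

The same `tc_to_frames` helper could be used in post to get the frame difference between two clips' start timecodes. Drop-frame rates such as 29.97 would need different handling.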

Curious about this as well. Would you mind updating it here if you happen to find a solution?

Thanks!

Emil