The title is not very descriptive, so let me explain.

Inside Aximmetry, we have two ways to transfer values from one compound to another.

One is to cable them together; the other is to use a "set pin" compound.

The first permanently binds the target to the source value. The second changes the target "on event", which lets multiple sources affect the same target.

The same choice should be available, at least for transformations sent from Aximmetry to Unreal.

What do we do now? We use a "Get Aximmetry Transformation" node and pass its output to an object's "Set World Location" on every tick.

Problems?

a) We fire a "Set World Location" on every tick. Even if Unreal ignores an unchanged value, it still has to check all the transformation parameters on every tick.

b) The worst part is that we are bound to a single source of information: we cannot control the object from anywhere except Aximmetry.

Proposed Solution.

It is better to stream commands to Unreal in the form of "command, tag, values".

Since we are talking about transformations, the command would be TRANSFORM, the values would be the transformation being passed, and the tag would identify the tagged objects in the editor.
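The sketch below makes the "command, tag, values" format concrete with a minimal parser. It is written in Python purely for illustration and is not Aximmetry or Unreal code. It assumes a comma-separated line with nine numbers: location, rotation (pitch, yaw, roll), and scale. `Command` and `parse_command` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Command:
    """One parsed 'command, tag, values' message (hypothetical structure)."""
    name: str            # e.g. "TRANSFORM"
    tag: str             # actor tag set in the Unreal editor
    values: list[float]  # payload; its meaning depends on the command


def parse_command(line: str) -> Command:
    """Parse an assumed 'COMMAND,TAG,v1,v2,...' line.

    The comma delimiter is an assumption; any delimiter works as long as
    the sender and the receiver agree on it.
    """
    parts = [p.strip() for p in line.split(",")]
    if len(parts) < 2 or not parts[0] or not parts[1]:
        raise ValueError(f"malformed command: {line!r}")
    name, tag, *raw_values = parts
    return Command(name.upper(), tag, [float(v) for v in raw_values])


# Assumed layout: x, y, z, pitch, yaw, roll, scale x, scale y, scale z
cmd = parse_command("TRANSFORM, Camera1, 100, 0, 250, 0, 90, 0, 1, 1, 1")
print(cmd.name, cmd.tag, cmd.values[:3])  # prints: TRANSFORM Camera1 [100.0, 0.0, 250.0]
```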

The only thing the user would have to do is tag those actors that need to be affected.

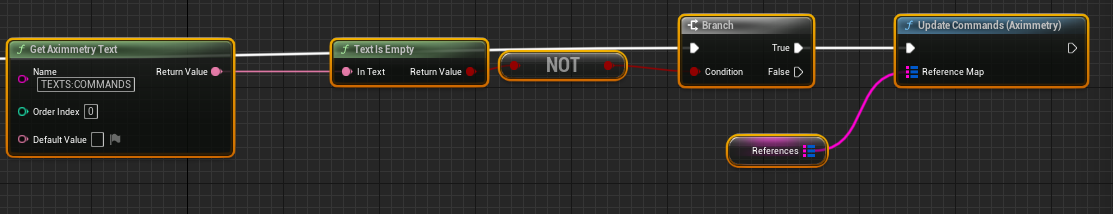

I have actually implemented such a system. The main line looks like the following image:

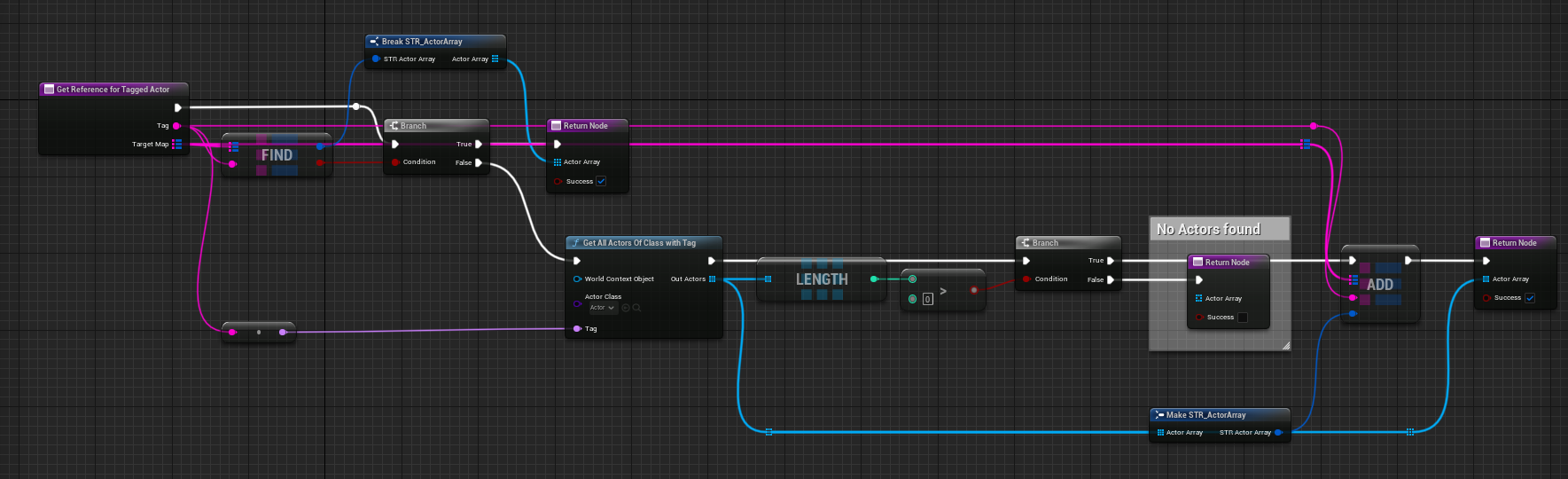

and the update step processes all the queued commands and acts on each one accordingly, as the following image shows.

For transformations in particular, as you can see, I use a reference map. This is purely an optimization, useful when those objects are animated and performance matters.

What does it do? The first time we ask for the objects with a given tag, it stores them in a map. Subsequent requests simply retrieve them from the map instead of searching for the tagged actors again, so that part runs in O(1).
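The reference map idea can be sketched as a small cache keyed by tag. This Python sketch is only an illustration. `TaggedActorCache` is a hypothetical name, and the `find_actors_with_tag` callable stands in for an engine query such as Unreal's "Get All Actors with Tag" node.

```python
class TaggedActorCache:
    """Cache tag -> actors so the expensive lookup runs once per tag."""

    def __init__(self, find_actors_with_tag):
        # find_actors_with_tag stands in for the engine query; any callable works here.
        self._find = find_actors_with_tag
        self._cache: dict = {}
        self.lookups = 0  # how many times the slow query actually ran

    def get(self, tag):
        actors = self._cache.get(tag)
        if actors is None:
            # First request for this tag: run the slow query once and remember it.
            actors = self._find(tag)
            self.lookups += 1
            self._cache[tag] = actors
        return actors  # later requests: a single dictionary lookup

    def invalidate(self, tag=None):
        """Drop one tag (or everything), e.g. after actors are spawned or destroyed."""
        if tag is None:
            self._cache.clear()
        else:
            self._cache.pop(tag, None)


scene = {"Camera1": ["CineCamera_1"], "Billboard": ["Billboard_A", "Billboard_B"]}
cache = TaggedActorCache(lambda tag: scene.get(tag, []))
cache.get("Billboard")
cache.get("Billboard")  # served from the map, no new query
cache.get("Camera1")
print(cache.lookups)  # prints: 2
```

Cached references go stale if tagged actors are spawned or destroyed at runtime. For that reason the sketch includes an `invalidate` method; whether the original Blueprint handles this is not stated.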

The benefit of this approach is that we can still control the object's position from Aximmetry as usual. The object can also be told (with a trigger or a user-designed command) to follow a spline inside the map. We cannot do this now; we have to choose one or the other.

This would answer a very frequently asked question: "Can I move my camera along a path?" or "Can I make a billboard follow a path?"
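Every command goes through one central processor that queues messages and applies them in the update step. That is why several sources (Aximmetry, a trigger, a user-designed command) can drive the same tagged actor. Below is a minimal Python sketch of such a dispatch loop. It is not the author's Blueprint. `CommandProcessor` and the `FOLLOW_SPLINE` command are hypothetical names, the latter standing in for a user-designed command.

```python
from collections import deque
from typing import Callable

# Hypothetical handler signature: handler(tag, values) -> None
Handler = Callable[[str, list], None]


class CommandProcessor:
    """Queues incoming commands and applies them all in one update step."""

    def __init__(self) -> None:
        self._queue: deque = deque()
        self._handlers: dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        """Associate a command name (case-insensitive) with a handler."""
        self._handlers[name.upper()] = handler

    def push(self, name: str, tag: str, values: list) -> None:
        """Called whenever a message arrives, from Aximmetry or any other source."""
        self._queue.append((name.upper(), tag, values))

    def update(self) -> int:
        """Drain the queue, dispatching each command; returns how many were handled."""
        handled = 0
        while self._queue:
            name, tag, values = self._queue.popleft()
            handler = self._handlers.get(name)
            if handler is None:
                continue  # unknown command: skip it instead of failing
            handler(tag, values)
            handled += 1
        return handled


positions = {}     # tag -> last location applied by TRANSFORM
following = set()  # tags currently told to follow a spline

proc = CommandProcessor()
proc.register("TRANSFORM", lambda tag, v: positions.update({tag: v[:3]}))
proc.register("FOLLOW_SPLINE", lambda tag, v: following.add(tag))

proc.push("TRANSFORM", "Billboard", [10.0, 20.0, 0.0])
proc.push("FOLLOW_SPLINE", "Camera1", [])
proc.push("UNKNOWN", "Camera1", [])
handled = proc.update()
print(handled, positions, following)  # prints: 2 {'Billboard': [10.0, 20.0, 0.0]} {'Camera1'}
```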

Since the first part of this is already possible (although some syntactic sugar to make it more compact would be welcome), the real suggestion is to migrate the Aximmetry Blueprints for cameras and billboards to this paradigm:

a) Tag them.

b) Remove the transformation bindings. You do not need them; the central command processor will take care of the transformations.

c) Instead of sending the transformations down separate transformation channels, as we do now, pack them in command format (i.e., TRANSFORM, TAG, VALUE) and send them down a text wire, or a custom wire if you want better performance.
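On the sending side, step c) amounts to turning each transform into one line of text. The following is a minimal Python sketch. It assumes a comma-separated layout (`TRANSFORM,tag,` followed by location, rotation, and scale). `format_transform` is a hypothetical helper, not an Aximmetry compound.

```python
def format_transform(tag, location, rotation, scale):
    """Pack one transform as 'TRANSFORM,tag,x,y,z,pitch,yaw,roll,sx,sy,sz' (assumed layout)."""
    if len(location) != 3 or len(rotation) != 3 or len(scale) != 3:
        raise ValueError("location, rotation and scale need 3 components each")
    if "," in tag:
        raise ValueError("tag must not contain the delimiter")
    numbers = [*location, *rotation, *scale]
    # repr(float(n)) gives the shortest text that parses back to the same float.
    return ",".join(["TRANSFORM", tag, *(repr(float(n)) for n in numbers)])


line = format_transform("Camera1", (100, 0, 250), (0, 90, 0), (1, 1, 1))
print(line)  # prints: TRANSFORM,Camera1,100.0,0.0,250.0,0.0,90.0,0.0,1.0,1.0,1.0
```

Rejecting tags that contain the delimiter keeps the receiver's split unambiguous. Using the shortest round-trip float text means no precision is lost on the text wire.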

You would get:

a) Better performance when the cameras and billboards do not move, or at worst the same performance.

b) A solution to the problem of moving objects both inside Aximmetry and inside Unreal.

Communicating with commands instead of bindings is also more portable. You just tag what is affected, and 99% of the project remains the same.

I would be glad to hear your feedback.

PS.

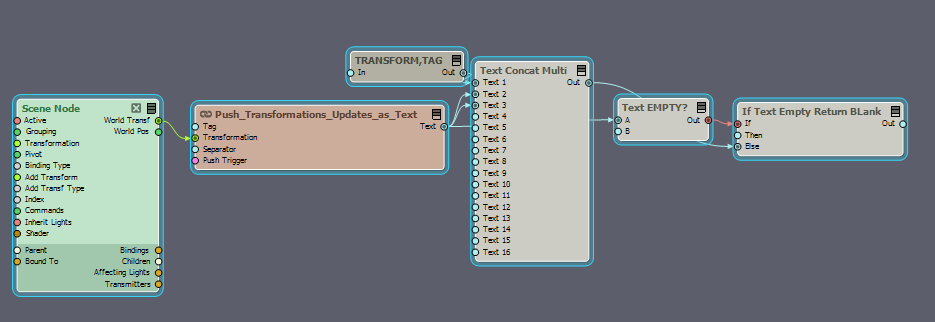

It's very easy to create a "transform command"; see the attached image for an example.

The "push transformations as text" compounds were shared here on the forum a few posts earlier.

This group just pushes transformation commands as soon as changes happen on the scene node.
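Here is one way to sketch the "push only on change" behaviour in Python. It is an illustration under assumptions, not the shared compounds themselves. `TransformPusher`, the `send` callable, and the `1e-4` tolerance are hypothetical choices.

```python
import math


class TransformPusher:
    """Emit a TRANSFORM command only when a node's transform actually changes."""

    def __init__(self, send, tolerance=1e-4):
        self._send = send            # callable that puts the text on the wire
        self._tolerance = tolerance  # hypothetical threshold per component
        self._last: dict = {}        # tag -> last transform actually sent

    def update(self, tag, transform):
        """Call every frame; returns True if a command was sent."""
        transform = tuple(float(v) for v in transform)
        previous = self._last.get(tag)
        if previous is not None and len(previous) == len(transform) and all(
            math.isclose(a, b, rel_tol=0.0, abs_tol=self._tolerance)
            for a, b in zip(previous, transform)
        ):
            return False  # nothing moved: send nothing this frame
        self._last[tag] = transform
        self._send("TRANSFORM," + tag + "," + ",".join(repr(v) for v in transform))
        return True


sent = []
pusher = TransformPusher(sent.append)
pusher.update("Camera1", (0, 0, 0))
pusher.update("Camera1", (0, 0, 0))        # unchanged: skipped
pusher.update("Camera1", (0, 0, 0.00001))  # below tolerance: skipped
pusher.update("Camera1", (5, 0, 0))
print(sent)  # prints: ['TRANSFORM,Camera1,0.0,0.0,0.0', 'TRANSFORM,Camera1,5.0,0.0,0.0']
```

Each frame is compared against the last value *sent*, not the previous frame. That way a slow drift that stays under the tolerance per frame is still sent once it adds up.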

Hi Buffos,

Thank you for the suggestion/tutorial.

Please note that you barely save any resources by not setting the world position on every tick. You would probably need to control thousands of actors to notice a performance difference, and even then the text parsing would probably cost more resources. However, you do save on the number of pins your Unreal node has in Aximmetry, so you will not run into the maximum pin limit of nodes.

Also note that if you set the camera's or the billboard's position in Unreal, some functionality of Aximmetry cameras, for example light warp, will not work. Two-way communication between Unreal and Aximmetry is on our list of requests, and it would enable us to fix such issues.

For anyone reading, Buffos made a tutorial that is similar to this post here: https://my.aximmetry.com/post/2900-tutorial-dynamic-texturing-an-object-on

Warmest regards,