Hi there.

Axy seems to prefer .dds CubeMaps over LatLon HDR images for things like EnvMaps in PBR shaders, skyboxes, etc. At least I haven't found a way to use a LatLon HDR with PBR shaders; they just return black.

Maybe I'm missing something, but there doesn't seem to be a LatLon-to-CubeMap converter in Axy,

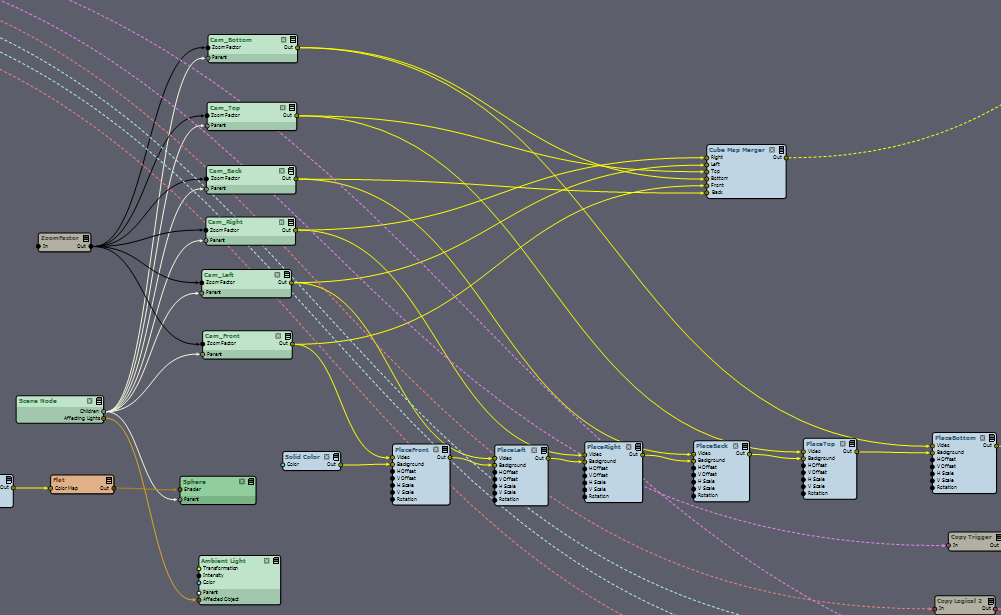

so I whacked together my own

Basically that good ole' six-cameras-in-a-sphere setup.

But even when I turn on HDR in the cameras, the rendered image is clipped to 16-bit int, which renders (ha!) the entire idea of having 32-bit float HDRs useless.

Is there any way to get 32-bit float images out of a render setup?

Cheers & all the best.

Eric.

Hi Eric,

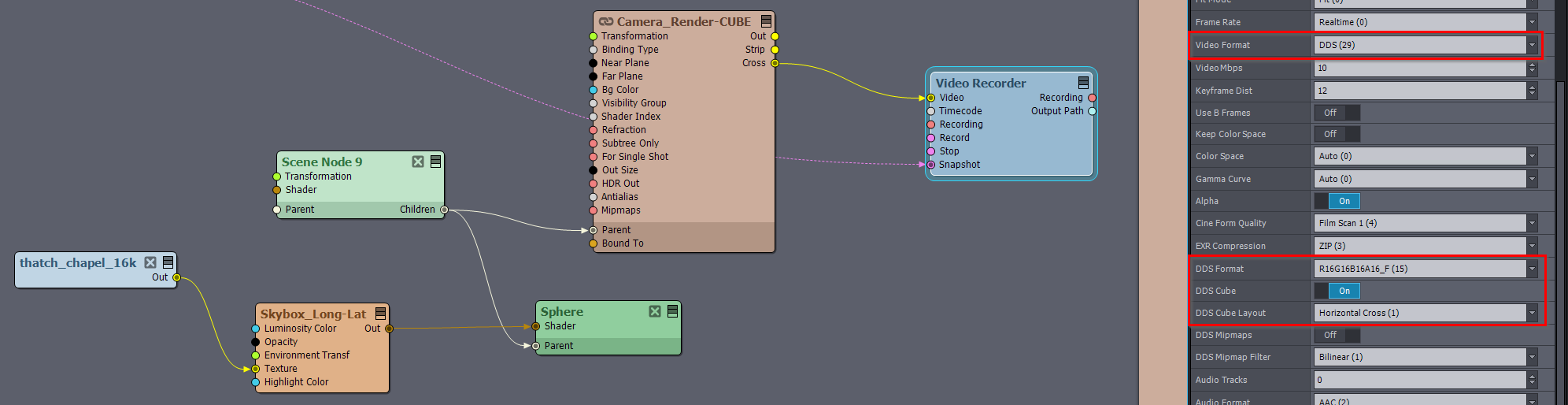

There is no dedicated LatLon-to-CubeMap converter in Aximmetry. However, there is a cubemap render compound at [Common]:Compounds\Camera\Camera_Render-CUBE.xcomp that you could have used to build your converter.
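
For reference, the six-camera setup boils down to one square camera with a 90° field of view per cube face, where every face pixel corresponds to a view direction. The Python sketch below computes that direction for a pixel center, plus the inverse lookup, using the face conventions from the OpenGL cube map specification (+X, -X, +Y, -Y, +Z, -Z). It is only an illustration: the function names are hypothetical, and the face order and orientation used by Camera_Render-CUBE.xcomp may differ.

```python
import math

# Face order and orientation follow the OpenGL cube map convention.
# Assumption: Aximmetry's Camera_Render-CUBE compound may use a different layout.
FACES = ("+X", "-X", "+Y", "-Y", "+Z", "-Z")


def _normalize(x, y, z):
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)


def face_pixel_direction(face, i, j, size):
    """Hypothetical helper: unit view direction through the center of pixel
    (column i, row j) on a size x size cube face (90 degree FOV camera)."""
    sc = 2.0 * (i + 0.5) / size - 1.0  # horizontal face coordinate in (-1, 1)
    tc = 2.0 * (j + 0.5) / size - 1.0  # vertical face coordinate in (-1, 1)
    if face == "+X":
        d = (1.0, -tc, -sc)
    elif face == "-X":
        d = (-1.0, -tc, sc)
    elif face == "+Y":
        d = (sc, 1.0, tc)
    elif face == "-Y":
        d = (sc, -1.0, -tc)
    elif face == "+Z":
        d = (sc, -tc, 1.0)
    elif face == "-Z":
        d = (-sc, -tc, -1.0)
    else:
        raise ValueError(f"unknown face {face!r}")
    return _normalize(*d)


def direction_to_face(x, y, z):
    """Inverse lookup: pick the face from the dominant axis and return
    (face, s, t) with s, t in [0, 1], as in the OpenGL cube map table."""
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        face, sc, tc, ma = ("+X", -z, -y, ax) if x > 0 else ("-X", z, -y, ax)
    elif ay >= az:
        face, sc, tc, ma = ("+Y", x, z, ay) if y > 0 else ("-Y", x, -z, ay)
    else:
        face, sc, tc, ma = ("+Z", x, -y, az) if z > 0 else ("-Z", -x, -y, az)
    return face, (sc / ma + 1.0) / 2.0, (tc / ma + 1.0) / 2.0
```

A converter would loop over every pixel of each face, compute its direction with something like `face_pixel_direction`, and sample the LatLon image along that direction, which is exactly what the six cameras inside the textured sphere do on the GPU.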

Also, you probably want to use the [Common]:Shaders\Terrain\Skybox_Long-Lat.xshad shader on the sphere, as shown above, to get a perfect mapping. And turn on Two Sided in the Sphere module.
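
The long-lat mapping itself is simple: a view direction is converted to longitude and latitude, which index the equirectangular image directly. Below is a minimal Python sketch, assuming one common convention (+Y up, longitude measured around the Y axis from +Z, image row 0 at the zenith). The actual orientation used by the Skybox_Long-Lat shader is not confirmed here, and the function names are hypothetical.

```python
import math


def direction_to_latlon_uv(x, y, z):
    """Map a unit direction to (u, v) in [0, 1] on an equirectangular image.
    Assumed convention: +Y is up, u = 0.5 looks along +Z, v = 0 is the zenith."""
    lon = math.atan2(x, z)                   # -pi .. pi around the Y axis
    lat = math.asin(max(-1.0, min(1.0, y)))  # -pi/2 .. pi/2, clamped for safety
    u = 0.5 + lon / (2.0 * math.pi)
    v = 0.5 - lat / math.pi
    return u, v


def sample_latlon(image, x, y, z):
    """Nearest-neighbour lookup in a row-major image (list of rows of pixels).
    A real converter would use bilinear filtering instead."""
    height, width = len(image), len(image[0])
    u, v = direction_to_latlon_uv(x, y, z)
    col = int(u * width) % width             # wrap horizontally (u = 1 is u = 0)
    row = min(int(v * height), height - 1)   # clamp vertically at the nadir
    return image[row][col]
```

Because the mapping is evaluated per pixel rather than baked into the sphere's vertex UVs, there is no stretching or seam at the poles, which is presumably why a dedicated shader gives a cleaner result than a plain texture on the sphere.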

For example, you could use this setup to save the environment map:

The Skybox_Long-Lat shader is the one to use when rendering a sky sphere from LatLon images.

There is a runtime example of virtual scene environment map generation at: [Tutorials]:Advanced 3D\Auto EnvMap Gen\Auto EnvMap Gen.xcomp

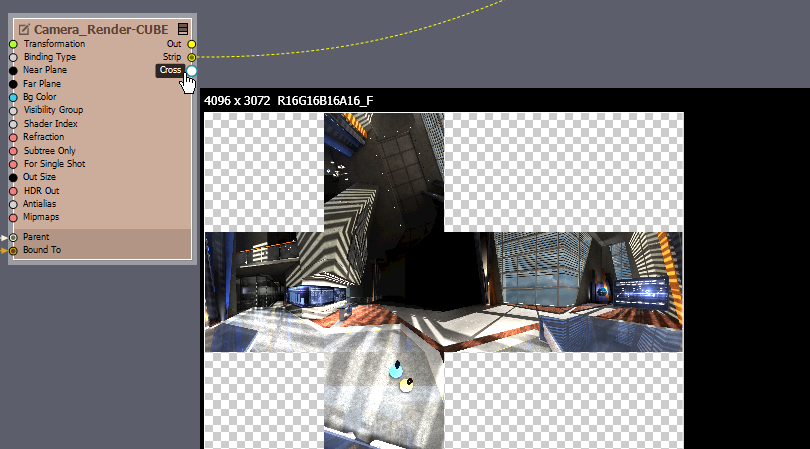

When HDR is turned on, images are rendered in 16-bit float, not 16-bit int. You can see this by peeking at the rendered video: the format reads R16G16B16A16_F, where F stands for float.

It is not like in Photoshop, where 16-bit means int and 32-bit means float.

There is no difference between 16-bit float and 32-bit float that the human eye can detect, so you shouldn't be concerned about 16 bits as long as it is float.
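
To illustrate why 16-bit float is enough for HDR while 16-bit int is not: a normalized 16-bit integer can only store values between 0 and 1, so anything brighter is clipped, whereas an IEEE 754 half-precision float (1 sign bit, 5 exponent bits, 10 mantissa bits) reaches up to 65504 with a relative rounding error of at most about 0.05%. The Python sketch below round-trips a few HDR intensities through both formats using the standard `struct` module (`'e'` is the half-precision format code). It only models the storage formats, not Aximmetry's renderer, and the helper names are hypothetical.

```python
import struct


def to_unorm16(value):
    """Store as a normalized unsigned 16-bit integer (0..65535 maps to 0..1)."""
    clamped = max(0.0, min(1.0, value))  # out-of-range HDR values are clipped
    return round(clamped * 65535) / 65535


def to_half(value):
    """Store as an IEEE 754 half-precision float, like a 16-bit _F channel."""
    (stored,) = struct.unpack("<e", struct.pack("<e", value))
    return stored


for intensity in (0.18, 1.0, 12.5, 3000.0):
    half = to_half(intensity)
    rel_error = abs(half - intensity) / intensity
    print(f"{intensity:>8}: unorm16={to_unorm16(intensity):.5f}  "
          f"half={half:<10} rel.error={rel_error:.2e}")
```

The int version flattens every value above 1.0 to pure white, while the half-float version keeps the bright values with only a tiny rounding error.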

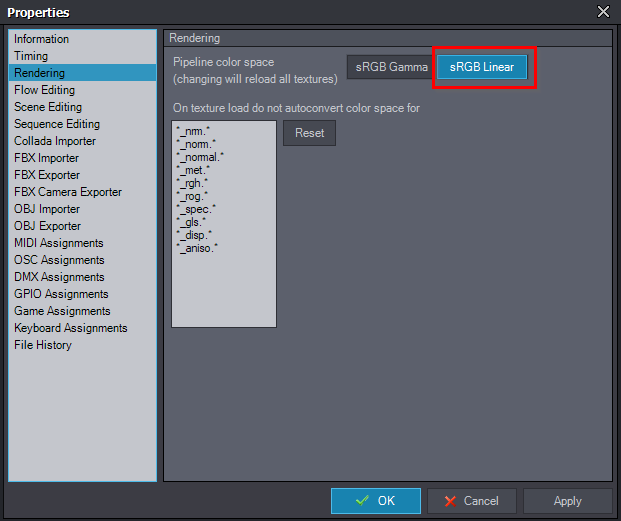

Also, make sure the pipeline color space is set to Linear:

Otherwise, you will not get the best out of your high-quality images or textures.
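
Why this matters: PBR lighting math (adding and scaling light) is only physically meaningful on linear values, while 8-bit textures and displays usually store sRGB-encoded values. A short Python sketch of the standard sRGB transfer functions (IEC 61966-2-1) shows how far apart the two encodings are. This is the textbook formula, not necessarily Aximmetry's exact internal implementation.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded channel value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode a linear channel value in [0, 1] back to sRGB."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1.0 / 2.4) - 0.055


# Mid-grey in sRGB (0.5) is only about 21% of full intensity in linear light,
# so lighting or blending sRGB values directly gives visibly wrong results.
print(srgb_to_linear(0.5))
print(linear_to_srgb(srgb_to_linear(0.5)))
```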

And we have recently published detailed documentation on how to make scenes in Aximmetry, including the PBR workflow and environment maps: https://aximmetry.com/learn/virtual-production-workflow/preparation-of-the-production-environment-phase-i/obtaining-graphics-and-virtual-assets/creating-content/creating-content-in-aximmetry-se/pbr-materials/

Warmest regards,