Hi Axy Support Team:

Unreal Engine can adopt the timecode values from an input SDI video feed coming in from an AJA or Blackmagic capture card and lock its frame rate to that feed so that it generates only one frame of output for each frame of input (from the Unreal Engine documentation). A Timecode Provider, Custom Timestep, and Timed Data Monitor are needed for this workflow. Does Aximmetry use the same workflow for genlocking the camera feed, the engine, and the tracking data? If so, can they actually be synced without external genlock hardware?

Hi Zeketan,

Aximmetry uses a different workflow and things are named a bit differently.

An "external" genlock syncs the external devices (camera and tracking) so that they capture at the same moment. It does not determine the delay between your computer and the camera or tracking, but it does keep that delay constant so it won't change over time. We have documentation on how to set up the delay between cameras and tracking: https://aximmetry.com/learn/tutorials/for-studio-operators/setting-up-virtual-sets-with-tracked-cameras/#inputs
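
To illustrate why a constant delay is easy to compensate for: once the offset between two streams is fixed, the earlier stream can simply be held back by a set number of frames. The following is only a minimal Python sketch of that idea, not how Aximmetry implements it internally; the `FixedDelay` class and the two-frame delay are hypothetical.

```python
from collections import deque


class FixedDelay:
    """Holds samples back by a fixed number of frames (hypothetical helper)."""

    def __init__(self, frames):
        self.frames = frames      # how many frames to hold each sample back
        self.buffer = deque()     # samples waiting to be released

    def push(self, sample):
        """Add the newest sample; return the one that is now `frames` old."""
        self.buffer.append(sample)
        if len(self.buffer) > self.frames:
            return self.buffer.popleft()
        return None               # not enough history buffered yet


# Assumed example: tracking arrives two frames earlier than the video.
tracking_delay = FixedDelay(2)
released = [tracking_delay.push(n) for n in range(5)]
print(released)
```

Without genlock the offset drifts, so no single delay value stays correct for long.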

What the Unreal documentation refers to as genlocking Unreal is done automatically in Aximmetry. The documentation describes it like this: "In some cases, you may want to go even further, and lock the engine so that it only produces one single frame for each frame of video that comes in through a reference input — we refer to this as genlock." https://docs.unrealengine.com/4.27/en-US/WorkingWithMedia/IntegratingMedia/ProVideoIO/TimecodeGenlock/

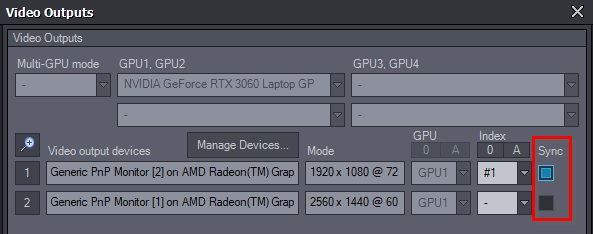

However, in most cases you will want to sync your render frame rate to the output using the Sync option.

If you don't have "external" genlock, then it doesn't make much sense to render by timecode change, as your various devices will capture at different moments.

Also, in most cases the timecodes from the various devices are not synced by the genlock. If you do have hardware that syncs them, you can use the Timecode Sync option in the inputs to automatically sync the inputs (tracking and video) based on their timecode.
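
As a rough illustration of what syncing by timecode means, the Python sketch below pairs each video frame with the tracking sample that carries the same timecode. It is not Aximmetry's implementation; the function names, the sample data, and the assumption of non-drop-frame `HH:MM:SS:FF` timecode at an integer frame rate are all hypothetical.

```python
def timecode_to_frames(timecode, fps):
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def pair_by_timecode(video, tracking, fps):
    """Pair (timecode, payload) items from both streams that share a timecode."""
    tracking_by_frame = {
        timecode_to_frames(tc, fps): data for tc, data in tracking
    }
    pairs = []
    for tc, frame in video:
        key = timecode_to_frames(tc, fps)
        if key in tracking_by_frame:      # skip frames with no matching sample
            pairs.append((frame, tracking_by_frame[key]))
    return pairs


# Assumed example data at 25 fps; the tracking stream starts one frame late.
video = [("01:00:00:00", "frame A"), ("01:00:00:01", "frame B")]
tracking = [("01:00:00:01", "pose B"), ("01:00:00:02", "pose C")]
print(pair_by_timecode(video, tracking, fps=25))
```

This only works when both devices stamp their data from the same timecode source, which is why it depends on hardware that keeps the timecodes in sync.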

Timecodes are mostly used in post-production, and you can record your device's timecode into the recorded video: https://aximmetry.com/learn/tutorials/for-studio-operators/recording-camera-tracking-data/#timecode

Warmest regards,