It's my understanding that Unreal Engine uses the ACES colour space within the editor and preview window. Does this carry through to Aximmetry? And if so, what colour space profile are we getting when we output via the recorder?

I can also see that there's an option to switch between an sRGB and a linear pipeline in Aximmetry, but I can't tell how this affects tone mapping throughout the application. I assume it has an effect on any stills or video sources that have been loaded in?

Hi,

Unreal renders in a linear color space but uses an sRGB workflow by default. You can convert to ACES by using tone mapping in a post-process volume together with HDR output: https://docs.unrealengine.com/4.26/en-US/RenderingAndGraphics/PostProcessEffects/ColorGrading/ (you will probably have to do considerably more than what is described in that documentation).

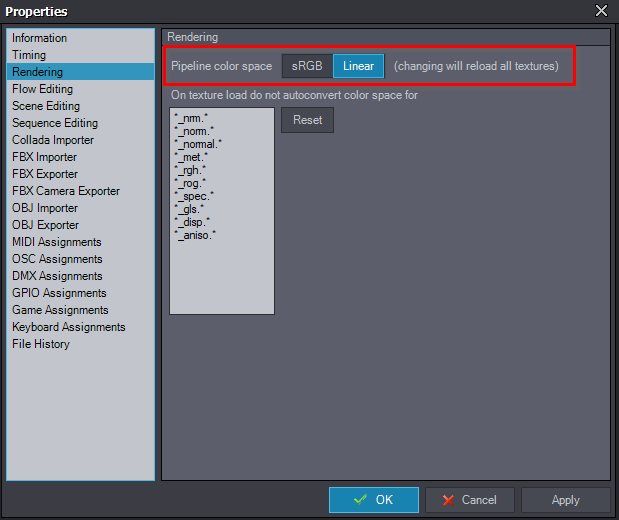
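To illustrate what "linear rendering with an sRGB output" means in practice, here is a minimal sketch of the chain: a linear scene value is tone mapped, then encoded with the standard sRGB transfer function. Assumption: the tone curve used here is Krzysztof Narkowicz's well-known fitted approximation of the ACES filmic curve, not Unreal's actual tonemapper, and the function names are hypothetical.

```python
def aces_filmic_approx(x: float) -> float:
    """Narkowicz's fitted approximation of the ACES filmic curve.
    NOT Unreal's exact tonemapper; used only to illustrate the idea."""
    a, b, c, d, e = 2.51, 0.03, 2.43, 0.59, 0.14
    y = (x * (a * x + b)) / (x * (c * x + d) + e)
    return min(max(y, 0.0), 1.0)  # clamp to the displayable [0, 1] range


def linear_to_srgb(v: float) -> float:
    """Standard sRGB (IEC 61966-2-1) encoding of a linear value in [0, 1]."""
    if v <= 0.0031308:
        return 12.92 * v  # linear segment near black
    return 1.055 * (v ** (1.0 / 2.4)) - 0.055  # power-law segment


def display_value(scene_linear: float) -> float:
    # Linear scene light -> tone-mapped linear display value -> sRGB-encoded signal
    return linear_to_srgb(aces_filmic_approx(scene_linear))


for exposure in (0.0, 0.18, 1.0, 4.0, 16.0):
    print(f"{exposure:6.2f} -> {display_value(exposure):.4f}")
```

The tone curve compresses unbounded scene values into [0, 1], and the sRGB encoding is applied afterwards; skipping either step (or applying one twice) is the usual cause of washed-out or overly dark output.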

You can switch between sRGB and Linear in Aximmetry; switching to Linear automatically changes Unreal's rendering from 10-bit to 16-bit.

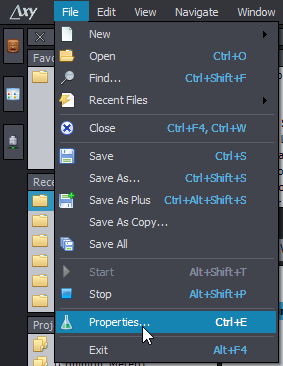
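The reason a linear pipeline needs more bits is that linear values spend very few codes on the shadows. The sketch below quantizes a dark linear value at 10 and 16 bits and compares the error. Assumption: it uses plain integer encoding for both depths; Aximmetry's 16-bit format may be a half-float format, which distributes precision differently, so treat the numbers as illustrative only.

```python
def quantize(v: float, bits: int) -> float:
    """Round a normalized value in [0, 1] to the nearest integer code at the
    given bit depth, then return it normalized again (integer encoding assumed)."""
    levels = (1 << bits) - 1
    code = round(min(max(v, 0.0), 1.0) * levels)
    return code / levels


dark_linear = 0.0005  # an illustrative deep-shadow value in linear light

for bits in (10, 16):
    q = quantize(dark_linear, bits)
    rel_err = abs(q - dark_linear) / dark_linear
    step = 1 / ((1 << bits) - 1)
    print(f"{bits}-bit: step={step:.2e}, relative error={rel_err:.1%}")
```

At 10 bits the shadow value snaps to a code almost twice its true size, which shows up as banding in dark gradients; at 16 bits the error is well under one percent.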

To change to Linear, go to Properties:

Rendering -> Pipeline color space:

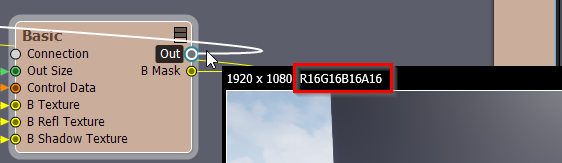

You can check the color bit depth by peeking (CTRL + mouse hover) at the video coming from Unreal.

Note that even when Unreal renders in 10-bit, Aximmetry converts the video coming from Unreal and works with it at 16-bit.
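Keep in mind that widening a 10-bit signal to 16-bit gives headroom for further processing inside Aximmetry, but it cannot restore precision that was already lost at 10 bits. A minimal sketch, assuming a simple integer rescale (the actual conversion Aximmetry performs is not described here, and `widen_10_to_16` is a hypothetical name):

```python
def widen_10_to_16(code10: int) -> int:
    """Rescale a 10-bit code (0..1023) to the 16-bit range (0..65535)."""
    return round(code10 * 65535 / 1023)


# Every possible 10-bit code, converted to 16-bit
widened = {widen_10_to_16(c) for c in range(1024)}
print(len(widened))  # prints 1024: still only 1024 distinct levels
```

The result occupies the 16-bit range, but it still contains only the 1024 levels the 10-bit source had, so rendering directly at 16-bit in Unreal is what actually adds precision.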

Warmest regards,