Hi,

As you can see, we are using a VIVE Tracker to interact with the water surface. The position of the person in the image and the water ripples are not synchronized. I am actually holding the Tracker and swinging my hand up and down to create the ripples. The movement of the real person on the green screen and the movement of the Tracker in the virtual scene are very different and difficult to match. At the moment the splashes can only be triggered by swinging the Tracker manually or via OSC, and neither method is very accurate.

Are there parameters or methods in V Cam and T Cam that can be calibrated so that the movement is automated and precise? I would be very grateful for any responses.

Hi,

If I understand correctly, you use the Vive tracker's position to interact with the water.

There are many delay parameters and modules that can help you keep everything in sync:

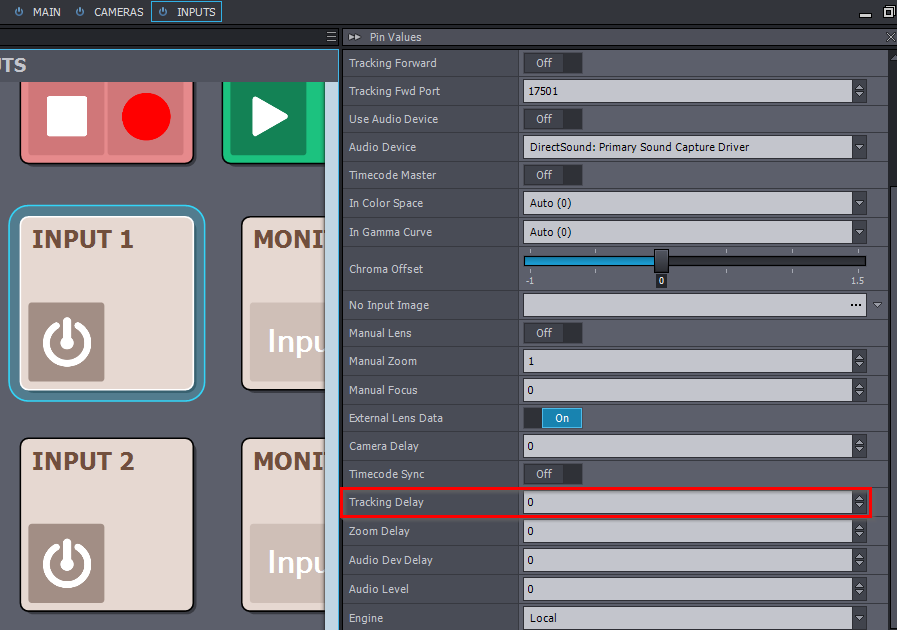

Tracked Camera:

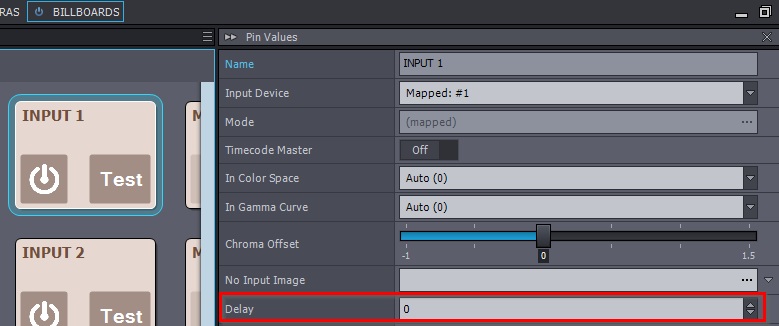

Virtual Camera:

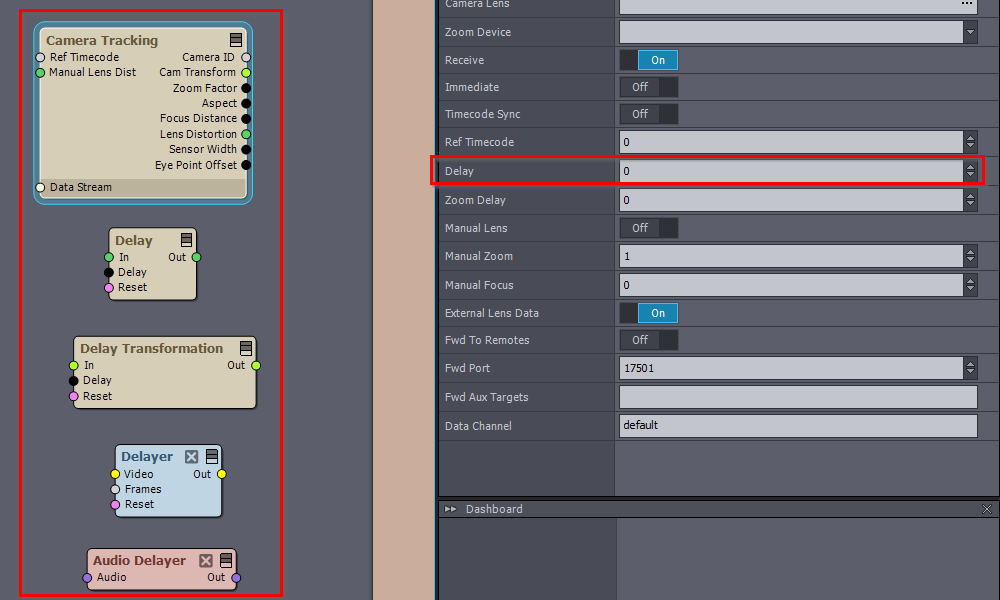
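Choosing a value for those delay fields comes down to measuring by how many frames the tracker data and the video are offset. Below is a minimal, generic Python sketch of that idea, not Aximmetry's API. It assumes you have recorded, for the same take, one per-frame value from the tracker (for example its height) and the matching per-frame value measured from the video (for example the hand's height, marked by hand or by some other method). It also assumes both are on a comparable scale. `DelayLine` and `estimate_lag` are hypothetical names for illustration only.

```python
from collections import deque


class DelayLine:
    """Hypothetical helper: delays a per-frame stream by a fixed number of frames."""

    def __init__(self, delay_frames, initial=None):
        if delay_frames < 0:
            raise ValueError("delay_frames must be non-negative")
        # Pre-fill so the first outputs repeat `initial` until real data has passed through.
        self._buffer = deque([initial] * delay_frames)

    def push(self, sample):
        """Add this frame's sample and return the sample from delay_frames ago."""
        self._buffer.append(sample)
        return self._buffer.popleft()


def estimate_lag(video_signal, tracker_signal, max_lag):
    """Hypothetical helper: return how many frames the video lags the tracker.

    Brute-force search: for each candidate lag, compare video[i] with
    tracker[i - lag] over the overlapping frames and keep the lag with the
    smallest mean squared difference.
    """
    best_lag, best_err = 0, float("inf")
    for lag in range(max_lag + 1):
        pairs = list(zip(video_signal[lag:], tracker_signal))
        if not pairs:
            break
        err = sum((v - t) ** 2 for v, t in pairs) / len(pairs)
        if err < best_err:
            best_lag, best_err = lag, err
    return best_lag


if __name__ == "__main__":
    # Made-up example: the same up/down hand swing, seen 2 frames later in the video.
    tracker = [0, 0, 1, 3, 1, 0, 0, 0, 0]
    video = [0, 0, 0, 0, 1, 3, 1, 0, 0]
    lag = estimate_lag(video, tracker, max_lag=4)
    line = DelayLine(lag, initial=0)
    print(lag, [line.push(x) for x in tracker])
```

With the made-up data above, the search finds a 2-frame offset, and delaying the tracker stream by that amount lines it up with the video. In practice you would translate the measured offset into whatever unit the relevant delay field expects (frames or milliseconds, depending on the field).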

Modules:

You probably want to use the Camera tracking module to receive the Vive tracker's tracking data in Aximmetry and then pass that transformation on to Unreal. More on passing transformations from Aximmetry to Unreal: https://aximmetry.com/learn/tutorials/for-aximmetry-de-users/how-to-install-and-work-with-the-unreal-engine-based-de-edition/#passing-data-from-aximmetry-to-unreal

Warmest regards,