Hello. I’m a student studying Aximmetry at school.

I'm currently working on a green screen-based virtual production setup using Aximmetry and Unreal Engine, and I have a few questions regarding a multi-machine configuration. I would appreciate any guidance or corrections based on your experience.

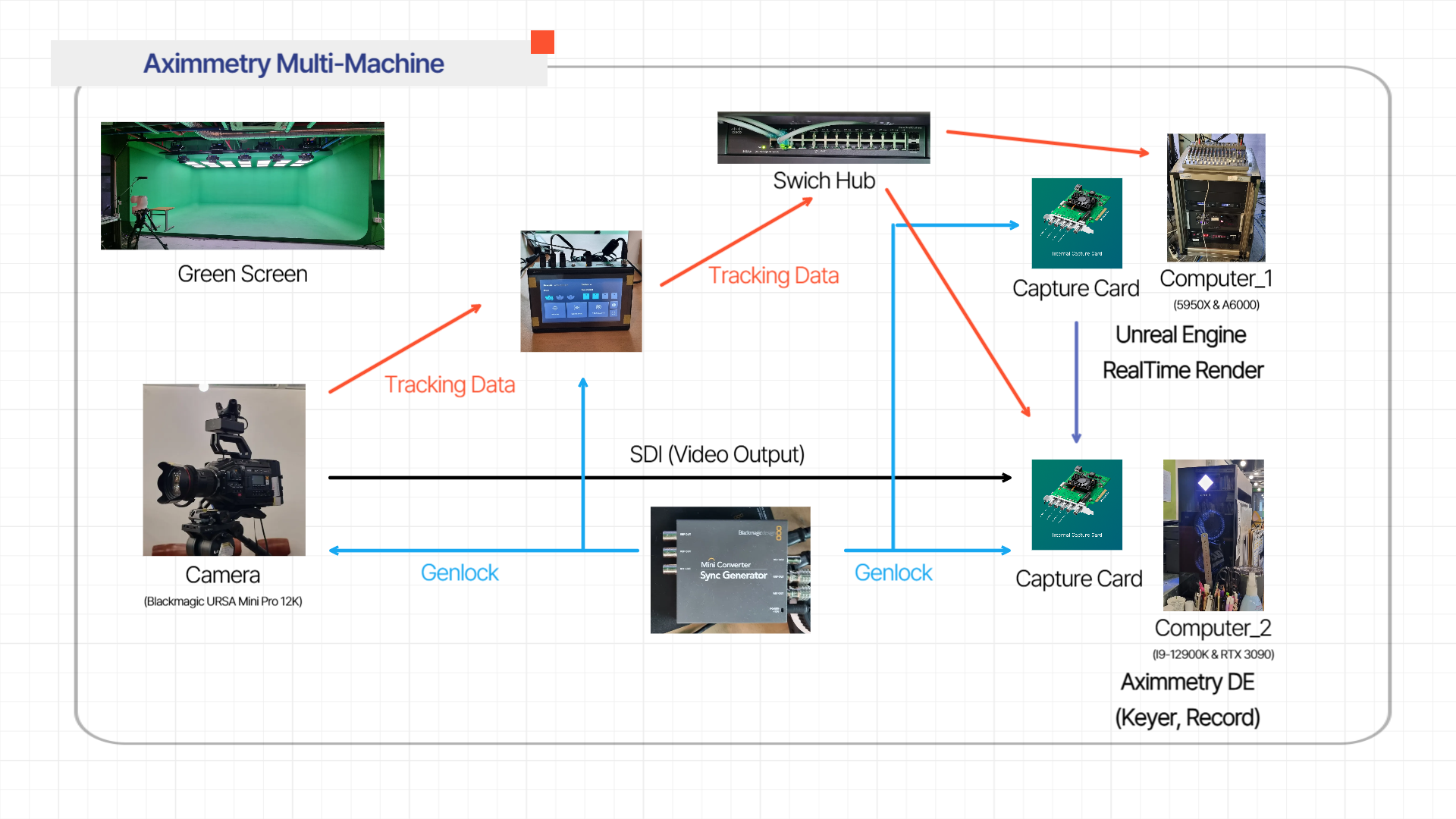

1. System Diagram Feedback

I've created a system diagram to outline how I'm planning to set up the machines, capture cards, and signal flow. If you notice any mistakes or areas for improvement, I’d be very grateful for your feedback.

2. Best Role Assignment for Each Machine

I have access to two computers:

Machine A: AMD Ryzen 9 5950X + NVIDIA RTX A6000

Machine B: Intel i9-12900K + NVIDIA RTX 3090

Based on performance and compatibility, which machine should run Unreal Engine, and which should run Aximmetry for optimal performance in a green screen virtual production workflow?

3. Capture Cards on Both Machines?

In a multi-machine setup (e.g., one machine for Aximmetry control, another for UE rendering), do both computers need capture cards, or can a single machine handle all of the SDI/NDI input and output?

4. Is Genlock Required in Green Screen Setups?

I understand that Genlock is essential in LED volume environments, but is it still necessary for chroma key setups, especially when using SDI cameras and multiple machines?

Thanks in advance for any insights or advice. Looking forward to hearing your thoughts!

Why would you run Unreal on a separate workstation? Unreal scenes open directly in Aximmetry, which is one of the main points of the whole system. Your proposed setup is needlessly complicated and will miss a lot of the functionality you get when rendering the UE5 scene inside Aximmetry.

Regarding genlock, you will need to genlock the camera and the Vive Mars tracking system. With a high-frame-rate tracking system like ReTracker Bliss you won’t need it, since the tracking data is locked to the camera video input in Aximmetry.