Hi!

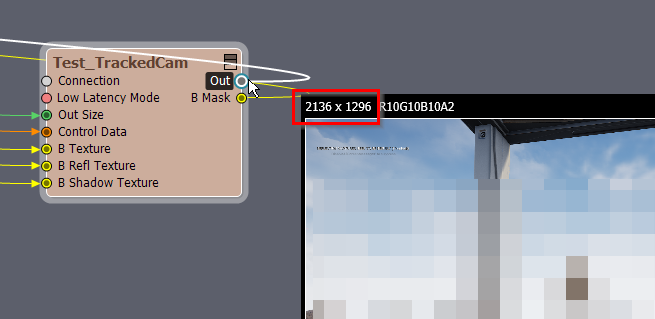

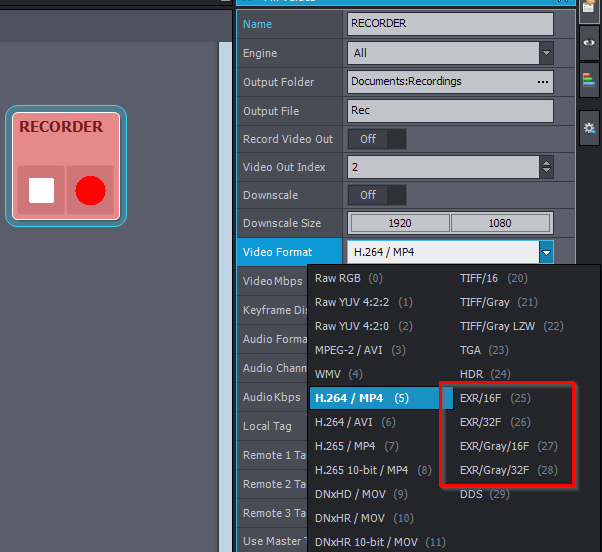

Is it possible to offline render in Aximmetry using the Path Tracer in UE?

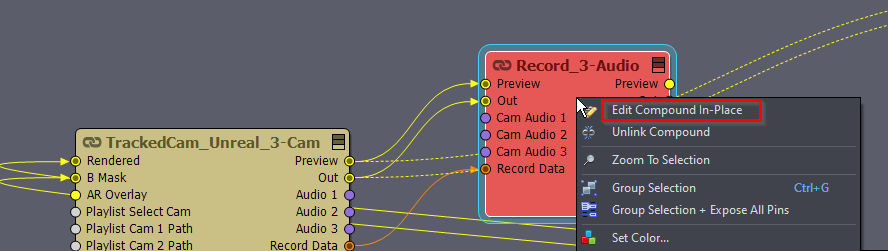

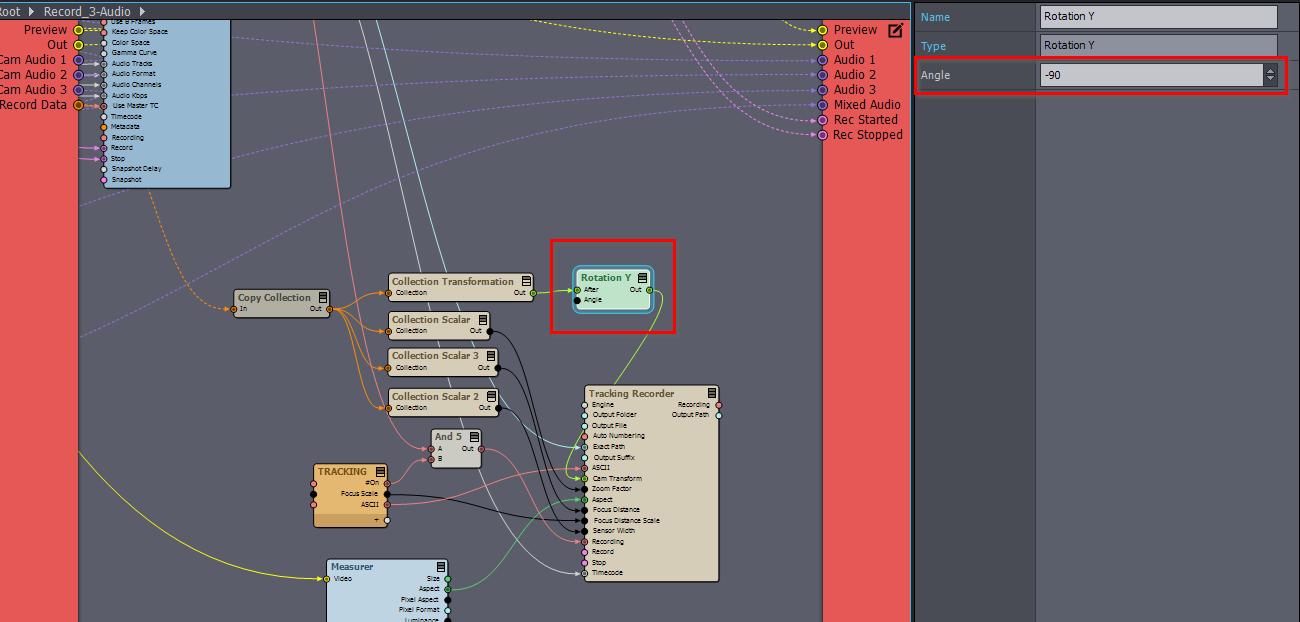

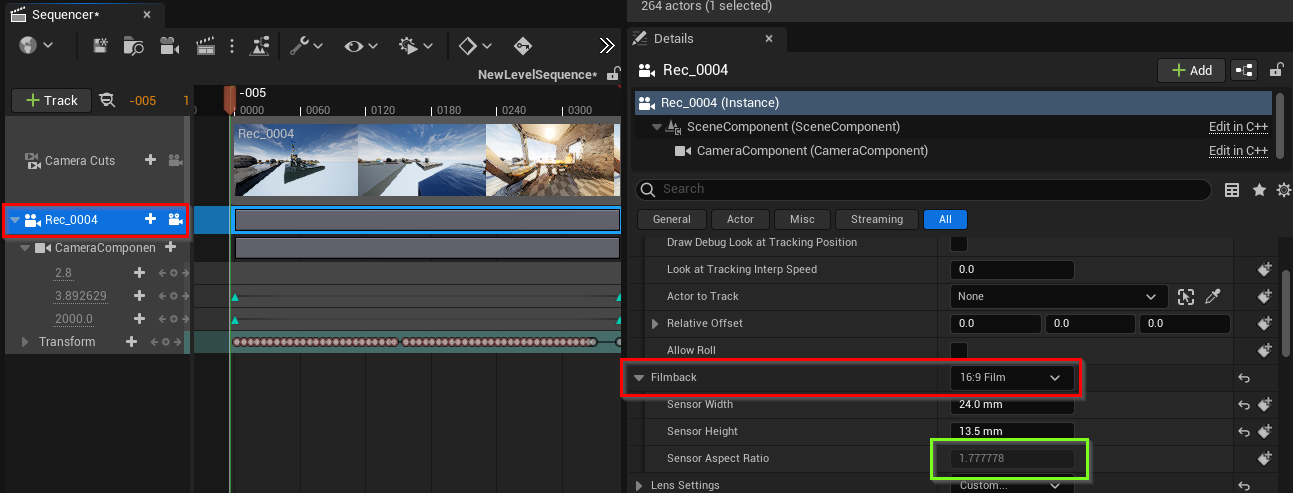

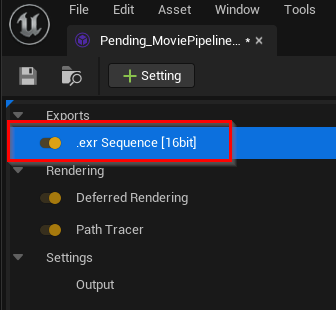

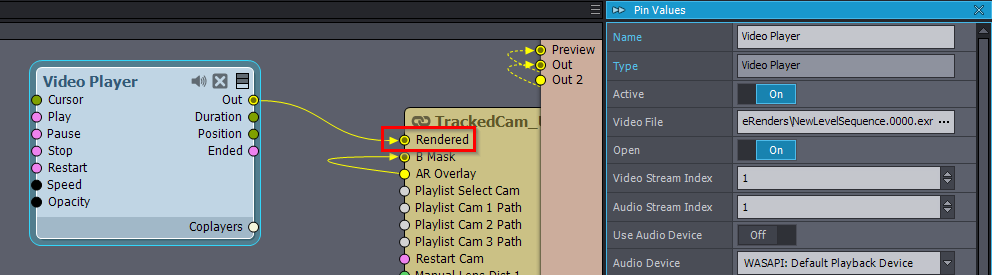

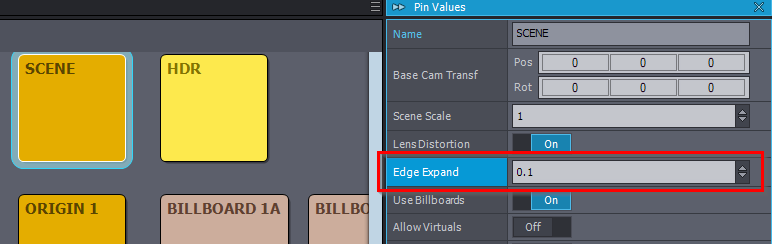

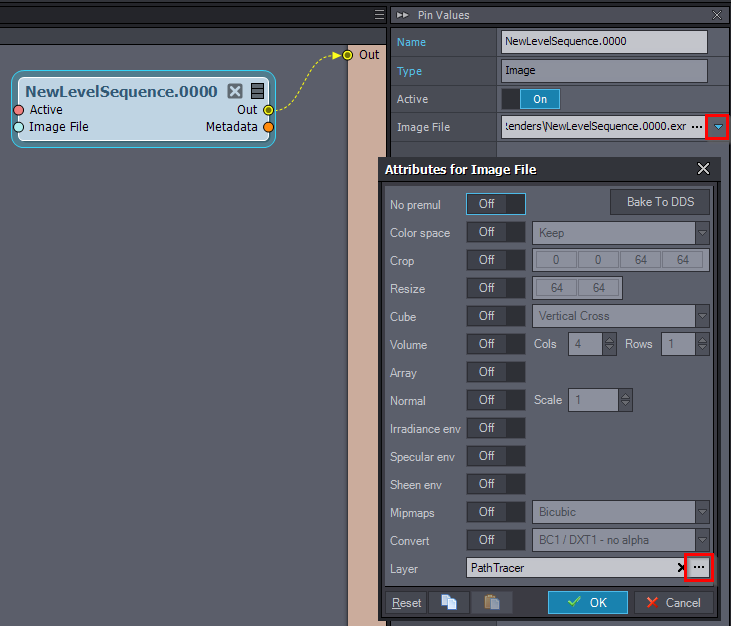

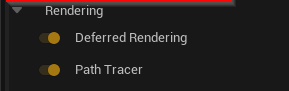

If not directly, is there any kind of workaround? The idea would be to use a lighter version of the scene, with Lumen or baked lighting for example, and record the tracking data. Then later, render the same scene offline with the Path Tracer for the best results.

Even just the UE background alone, rendered straight from UE without the comp/actors, would be perfect.

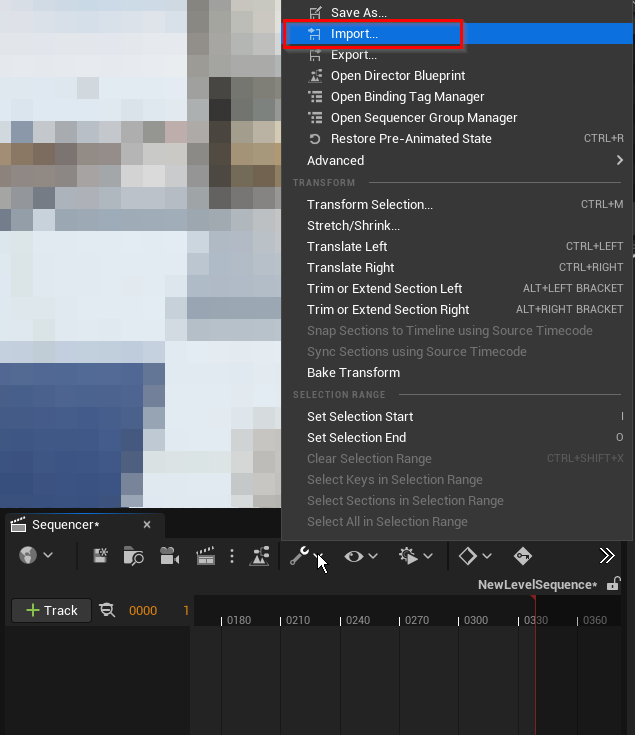

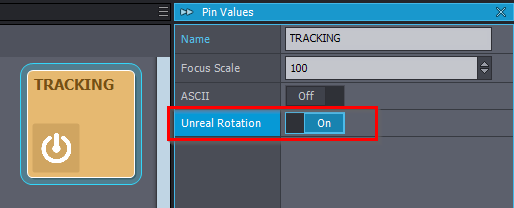

This would be easy to do in UE if the tracking data could be properly imported there. But I guess it's still not possible to export ST maps with lens distortion data to accompany the FBX files, so there is still no solution for that? If not, I hope there is a way to do this with the tracking data inside Aximmetry.

Emil

A little bump.

UE 5.3 was just announced and the Path Tracer is going to get even more love, so this topic will only become more critical with future updates.

Eifert? :P Anyone? Thanks in advance!

Emil